
Our main research interests include:

- computer vision, surveillance: crowd analysis, crowd counting, crowd tracking, visual object tracking, multi-view vision, dynamic textures, motion segmentation, motion analysis, image captioning and annotation, image retrieval.
- machine learning, pattern recognition: probabilistic graphical models, deep learning, Bayesian models, Gaussian processes, active learning.
- explainable AI (XAI): gradient-based attribution methods, user trust.
- eye gaze analysis: modeling eye movements with hidden Markov models (HMMs), clustering HMMs, co-clustering, DNN+HMM.
- computer audition, music information retrieval: semantic music annotation and retrieval, music segmentation.
- data-driven computer graphics: data-driven graphic design, machine learning for graphics.

In particular, we aim to develop machine learning models, such as generative probabilistic models and deep learning models, of images, video, and sound that can be applied to computer vision and computer audition problems, such as crowd monitoring, image understanding, and music understanding. Our current research projects are listed below.

Understanding Crowded Environments

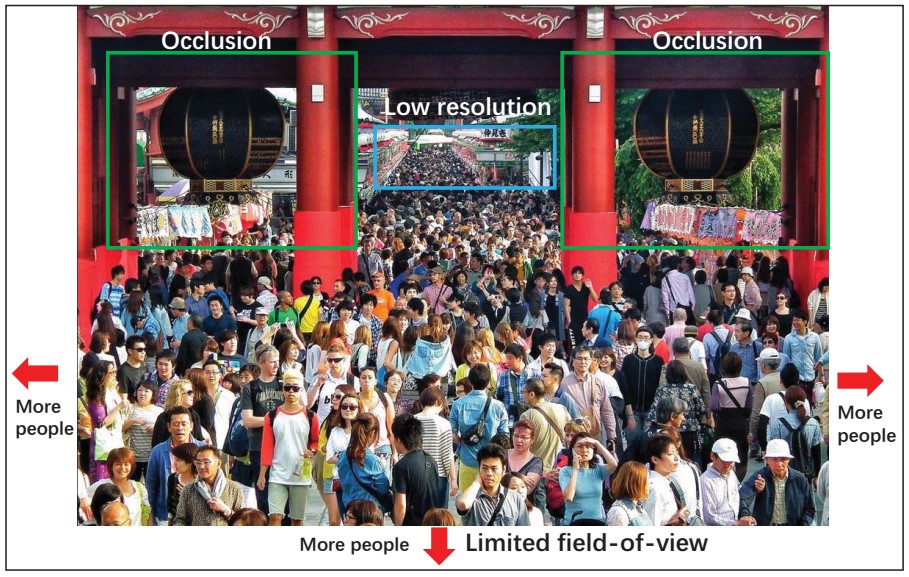

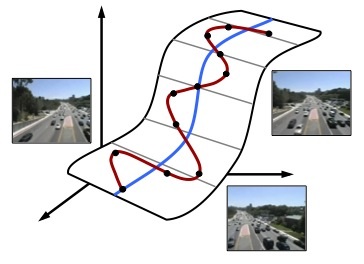

People counting, detection, and tracking in images/video of crowded environments, such as pedestrian scenes and highway traffic.

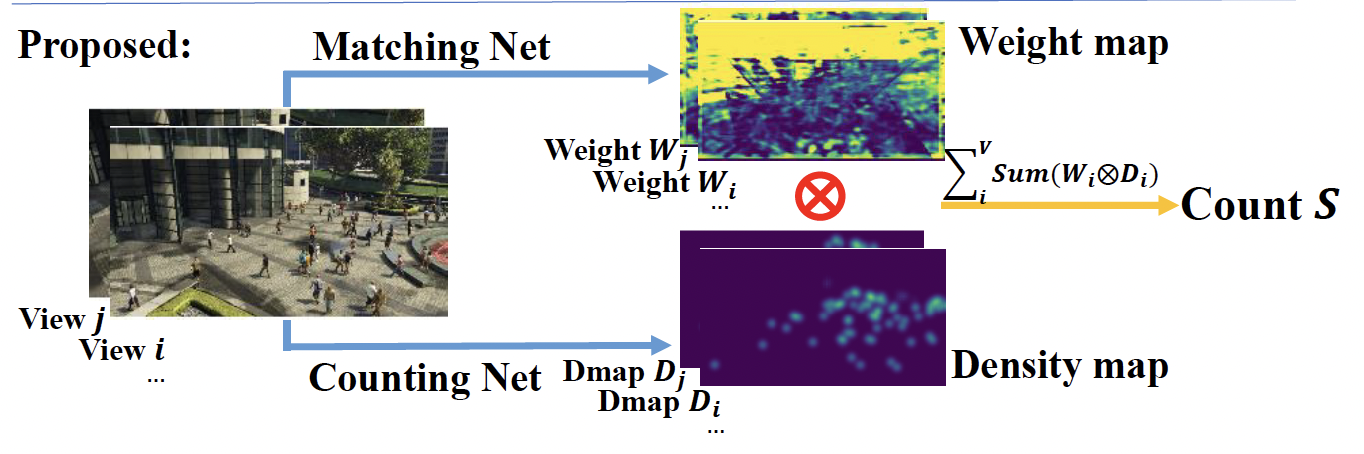

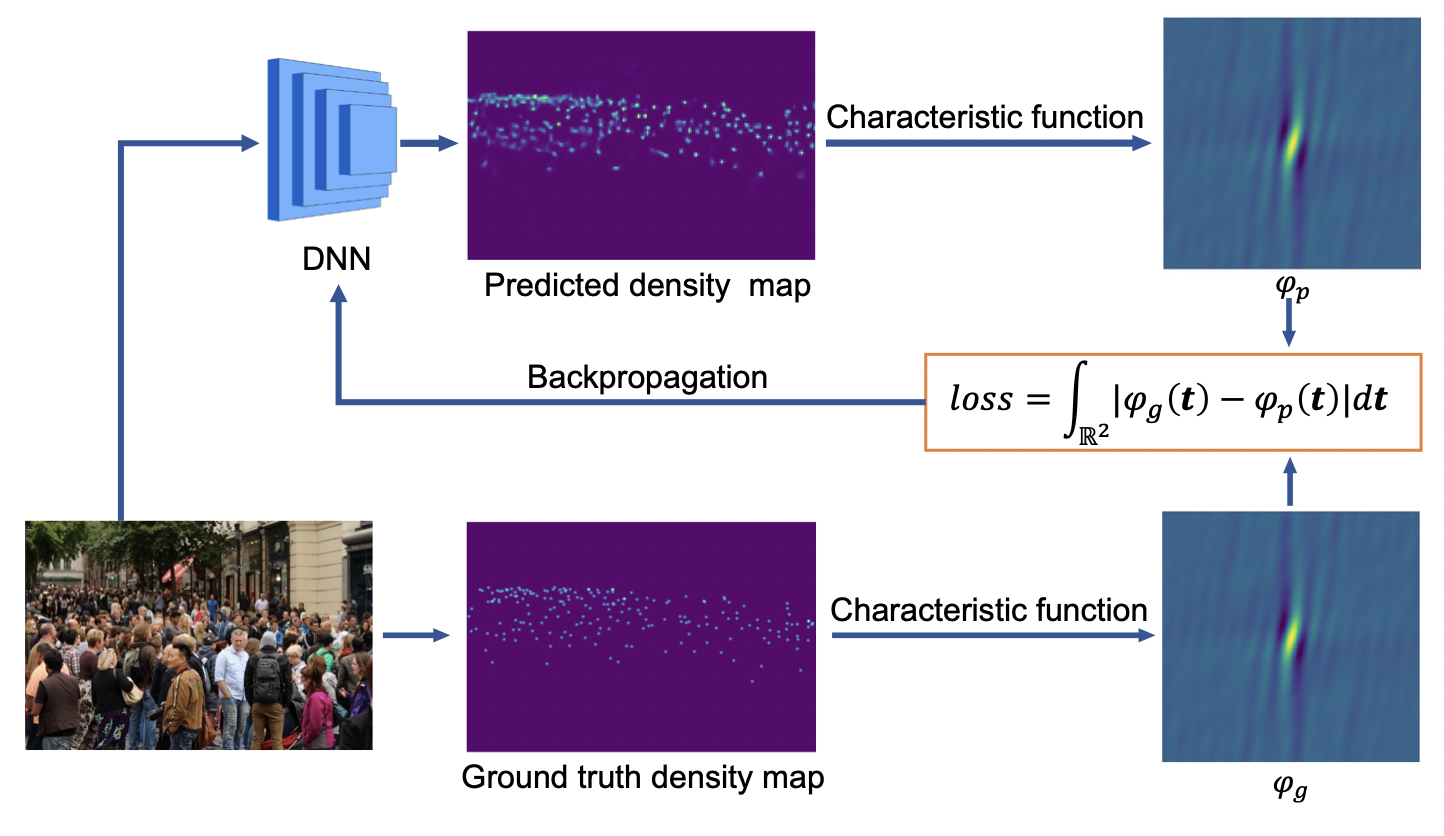

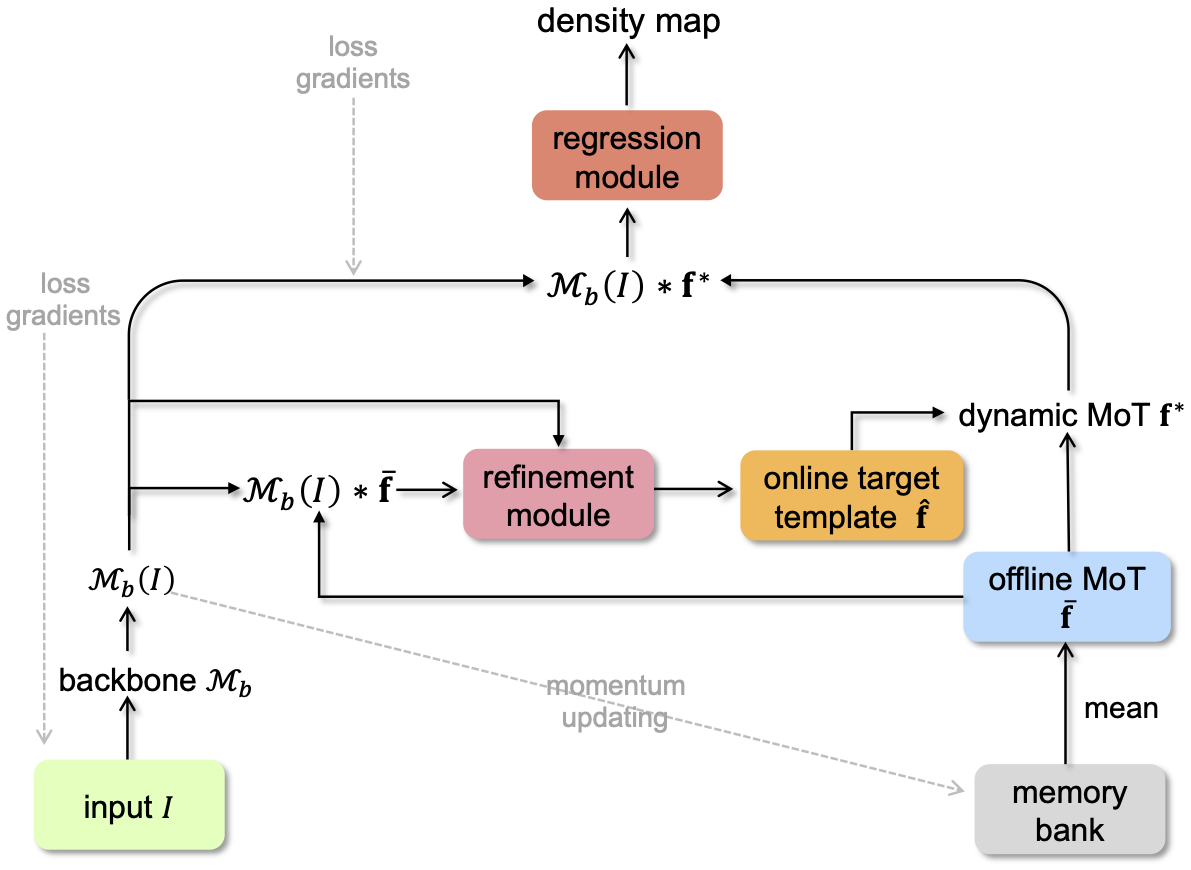

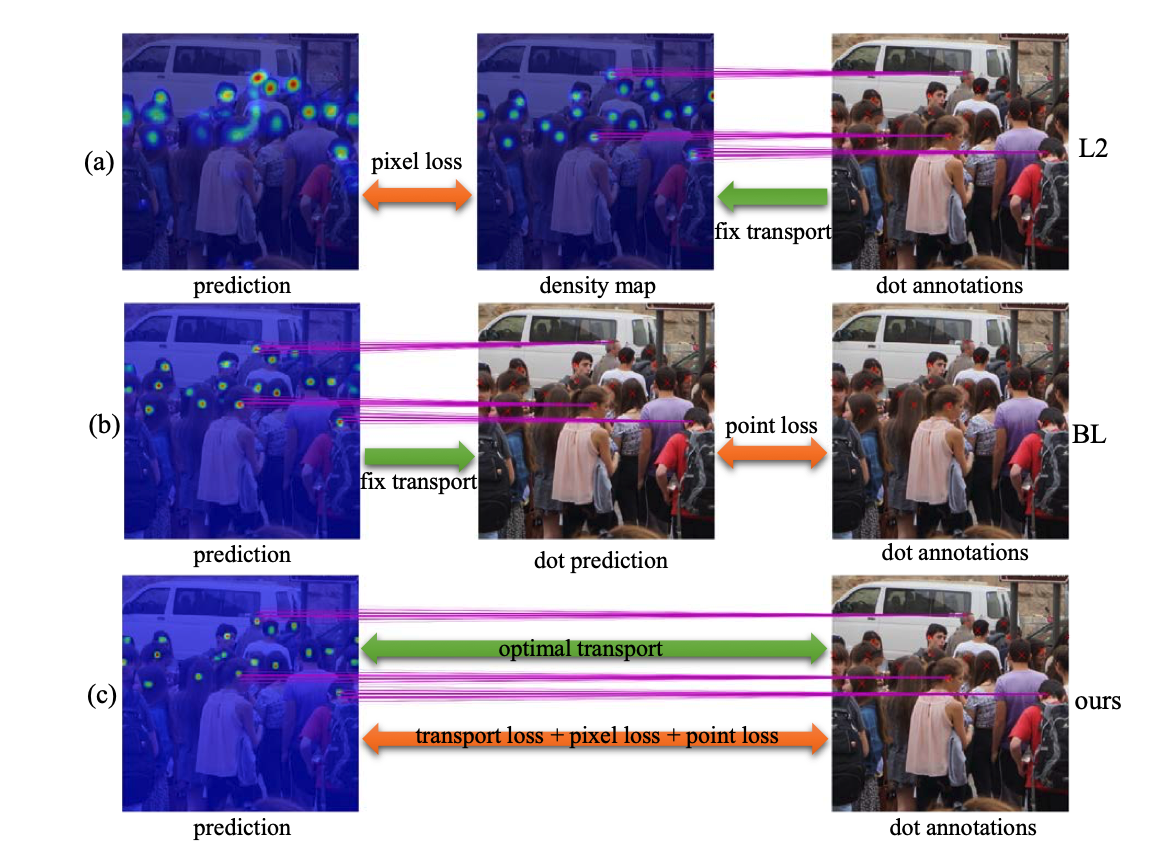

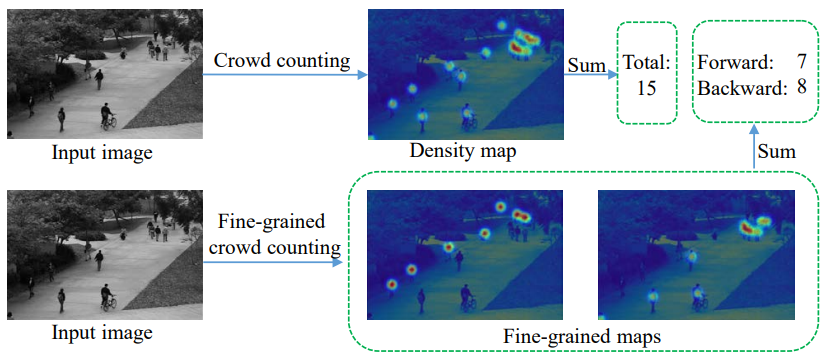

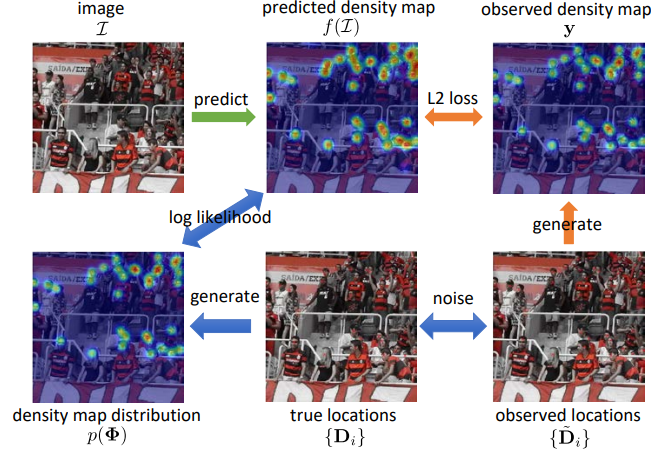

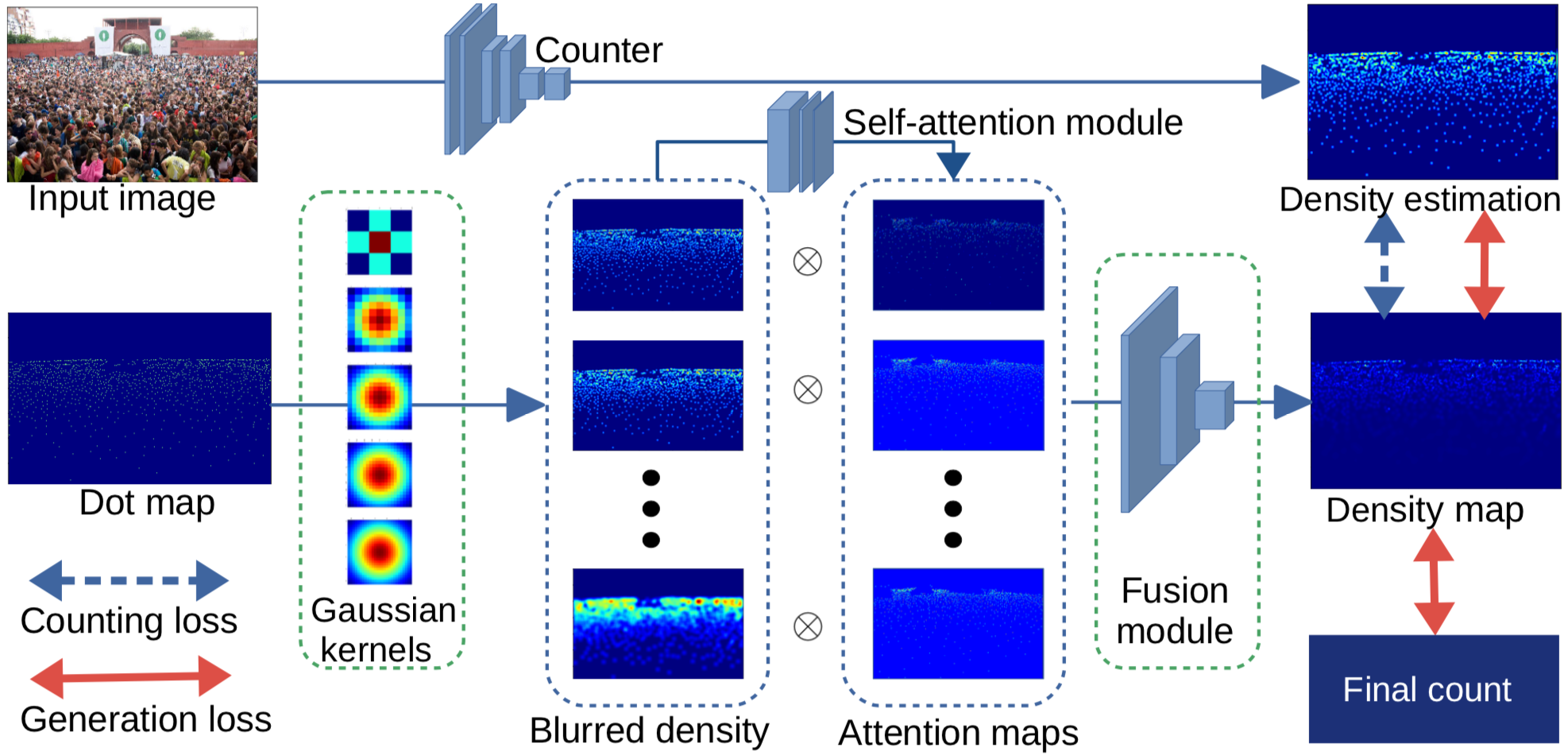

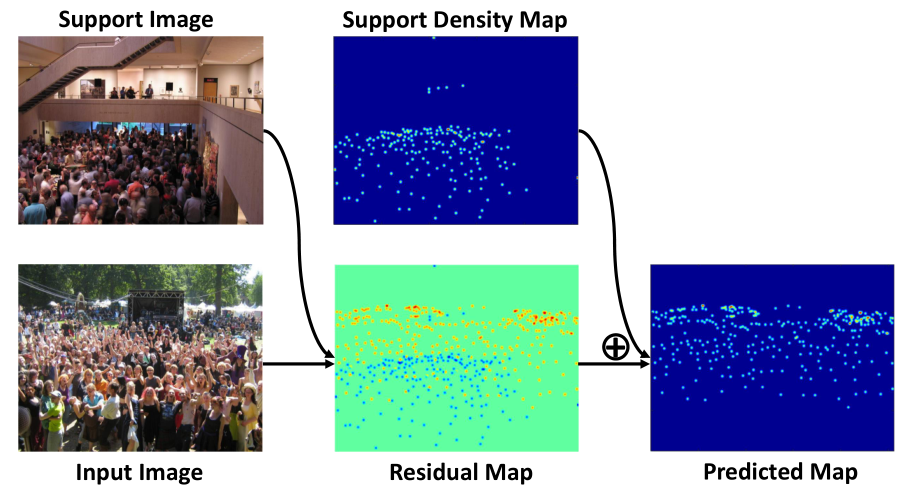

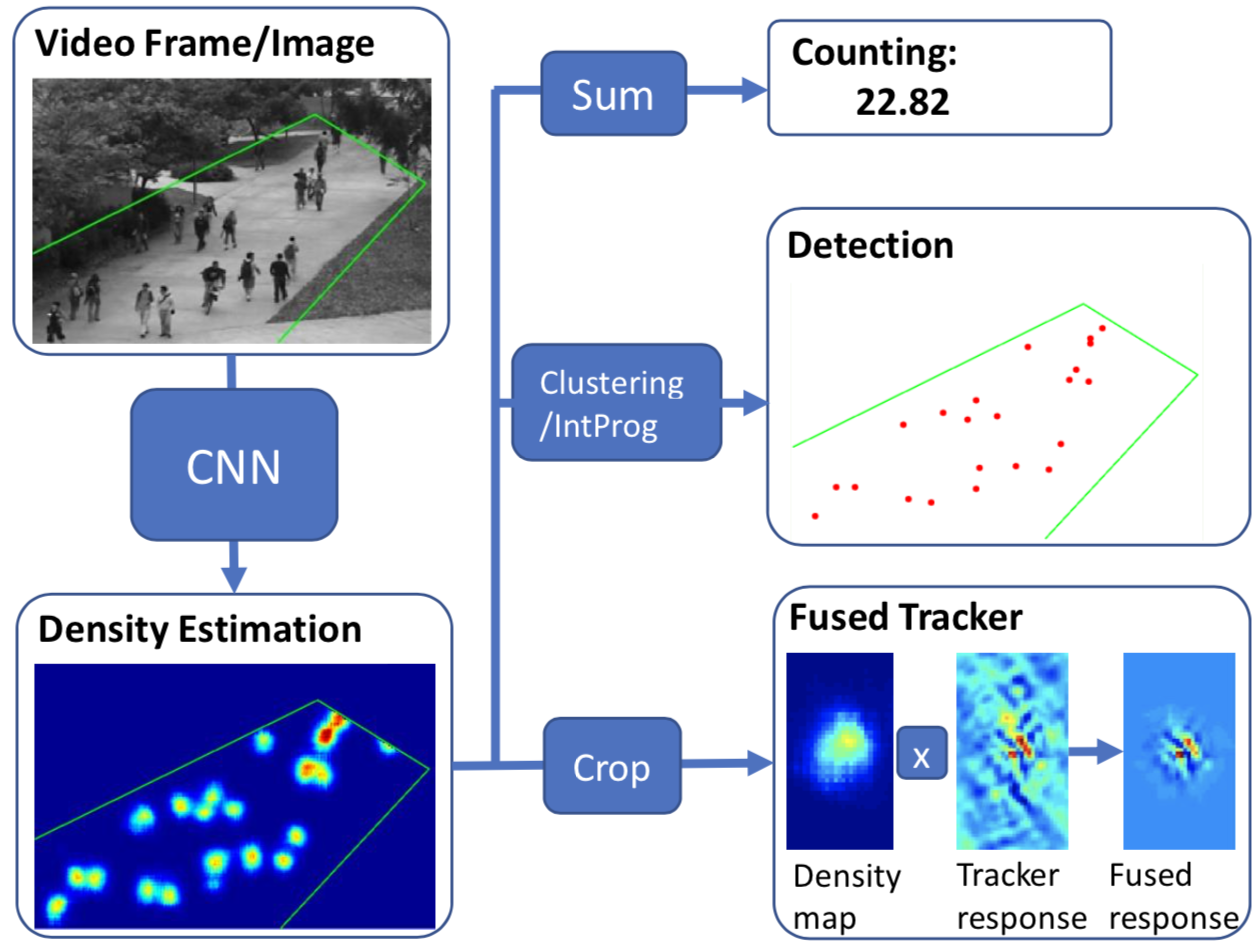

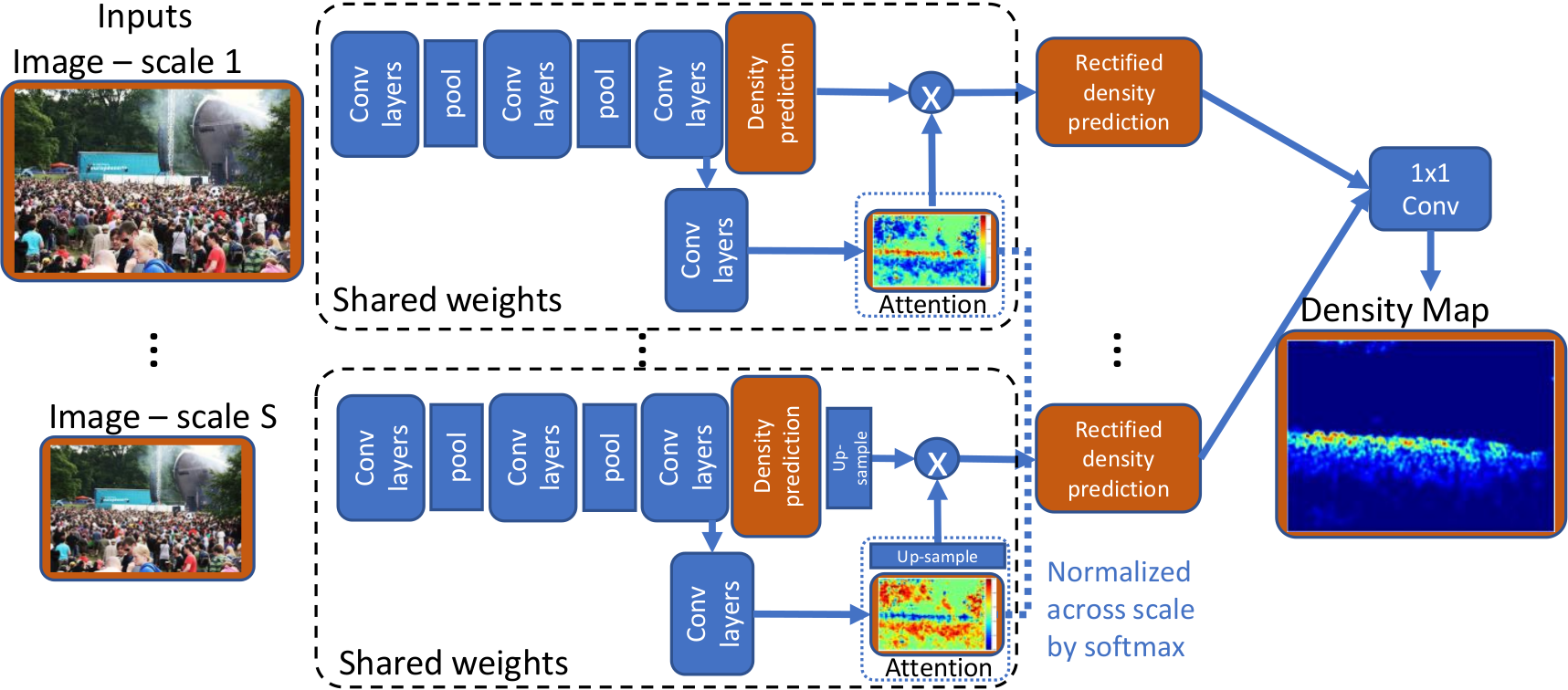

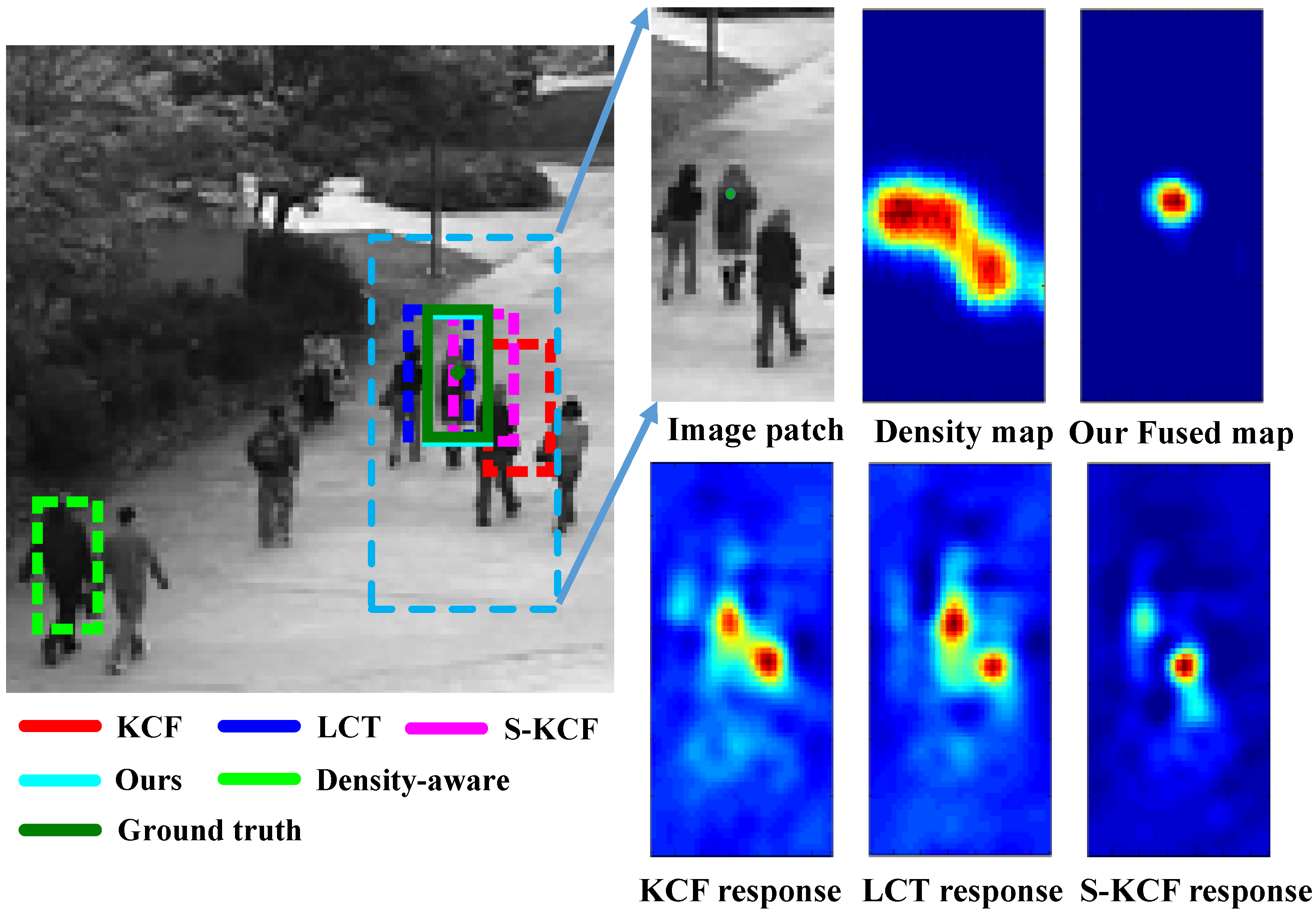

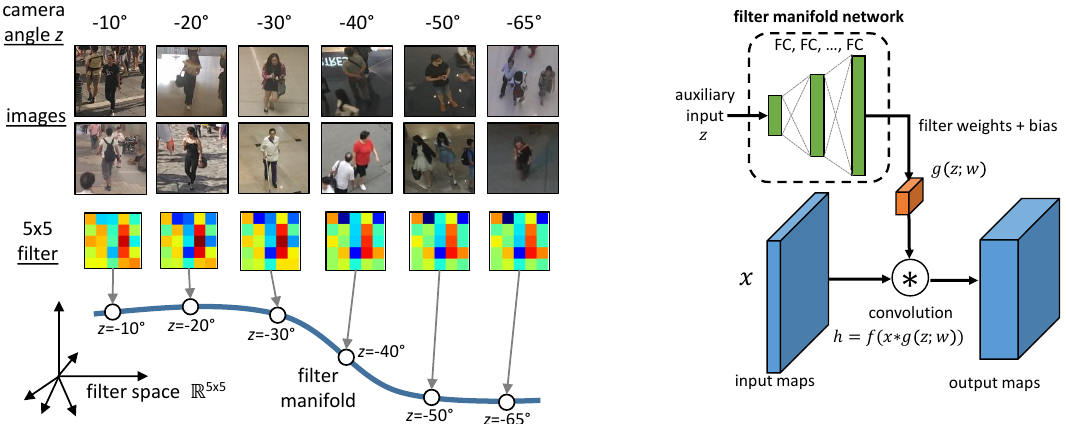

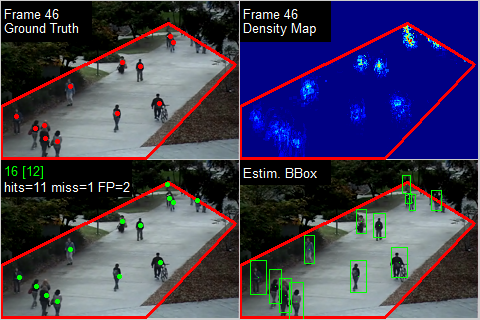

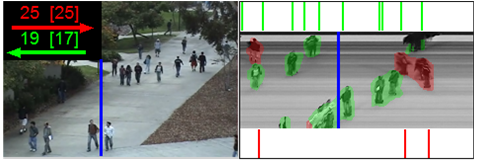

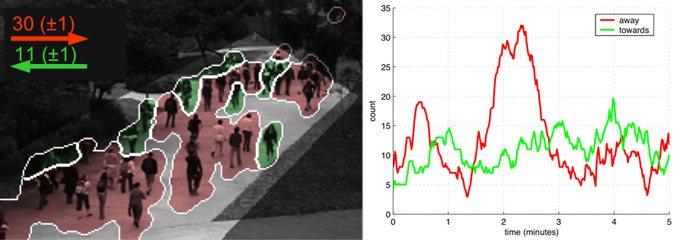

We propose a calibration-free multi-view crowd counting (CF-MVCC) method, which obtains the scene-level count as a weighted summation over the predicted density maps from the camera-views, without needing camera calibration parameters.

We propose a synchronization model that operates in conjunction with existing DNN-based multi-view models to allow them to work on unsynchronized data.

We derive loss functions in the frequency domain for training density map regression for crowd counting.

We propose a novel Crowd Counting framework built upon an external Momentum Template, termed C2MoT, which enables the encoding of domain-specific information via an external template representation.

We propose a generalized loss function for density map regression based on unbalanced optimal transport. We prove that pixel-wise L2 loss and Bayesian loss are special cases and sub-optimal solutions to our proposed loss. Since the predicted density will be pushed toward annotation positions, the density map prediction will be sparse and can naturally be used for localization.

We propose a cross-view cross-scene (CVCS) multi-view crowd counting paradigm, where the training and testing occur on different scenes with arbitrary camera layouts.

We propose fine-grained crowd counting, which differentiates a crowd into categories based on the low-level behavior attributes of the individuals (e.g. standing/sitting or violent behavior) and then counts the number of people in each category. To enable research in this area, we construct a new dataset of four real-world fine-grained counting tasks: traveling direction on a sidewalk, standing or sitting, waiting in line or not, and exhibiting violent behavior or not.

We propose a new multiple-object tracking (MOT) paradigm, tracking-by-counting, tailored for crowded scenes. Using crowd density maps, we jointly model detection, counting, and tracking of multiple targets as a network flow program, which simultaneously finds the globally optimal detections and trajectories of multiple targets over the whole video.

We model the annotation noise using a random variable with Gaussian distribution and derive the pdf of the crowd density value for each spatial location in the image. We then approximate the joint distribution of the density values (i.e., the distribution of density maps) with a full covariance multivariate Gaussian density, and derive a low-rank approximation for tractable implementation.

Recently, an end-to-end multi-view crowd counting method called multi-view multi-scale (MVMS) has been proposed, which fuses multiple camera views using a CNN to predict a 2D scene-level density map on the ground-plane. Unlike MVMS, we propose to solve the multi-view crowd counting task through 3D feature fusion with 3D scene-level density maps, instead of the 2D ground-plane ones.

In end-to-end training, the hand-crafted methods used for generating the density maps may not be optimal for the particular network or dataset used. To address this issue, we propose an adaptive density map generator, which takes the annotation dot map as input, and learns a density map representation for training a counter. The counter and generator are trained jointly within an end-to-end framework.

We propose a residual regression framework for crowd counting that harnesses the correlation information among samples. By incorporating such information into our network, we find that the network learns more intrinsic characteristics and thus generalizes better to unseen scenarios. We also show how to effectively leverage the semantic prior to improve the performance of crowd counting.

We propose a deep neural network framework for multi-view crowd counting, which fuses information from multiple camera views to predict a scene-level density map on the ground-plane of the 3D world.

We propose CNN-pixel and FCNN-skip to produce an original-resolution density map. In our experiments, we found that the lower-resolution density maps sometimes have better counting performance. In contrast, the original-resolution density maps improved localization tasks, such as detection and tracking, compared to bilinearly upsampling the lower-resolution density maps.

We utilize an image pyramid to deal with scale variations. Moreover, we adaptively fuse the predictions from different scales (using adaptively changing per-pixel weights), which makes our method adapt to scale changes within an image.

We propose a framework for tracking people in crowds that fuses a generic visual object tracker with an estimated crowd density map using a convolutional neural network (CNN). We also design a Sparse Kernelized Correlation Filter (S-KCF) to suppress spurious responses and target response variations caused by occlusions and illumination changes.

In order to incorporate the available side information, we propose an adaptive convolutional neural network (ACNN), where the convolution filter weights adapt to the current scene context via the side information.

We propose a novel object detection framework using object density maps for partially-occluded small instances, such as pedestrians in low-resolution surveillance video.

We propose an integer programming method for estimating the instantaneous count of pedestrians crossing a line of interest in a video sequence.

We estimate the size of moving crowds in a privacy-preserving manner, i.e. without people models or tracking. The system first segments the crowd by its motion, extracts low-level features from each segment, and estimates the crowd count in each segment using a Gaussian process.

We classify traffic congestion in video by representing the video as a dynamic texture, and classifying it using an SVM with a probabilistic kernel (the KL kernel). The resulting classifier is robust to noise and lighting changes.
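
Many of the counting methods above regress a crowd density map whose integral gives the count. As a rough illustration only (not the implementation of any specific paper listed here), the sketch below builds a density map from dot annotations with a fixed Gaussian kernel and then forms a scene-level count as a weighted sum over per-view maps. The function names, the kernel size, and the constant 0.5 weights are all assumptions made for the example; in the methods above the maps and weights would be predicted by a network.

```python
import numpy as np


def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Return a size x size Gaussian kernel normalised to sum to 1 (size must be odd)."""
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()


def density_map(points, shape, size: int = 15, sigma: float = 3.0) -> np.ndarray:
    """Place one unit-mass Gaussian per annotated person.

    points: iterable of (row, col) integer dot annotations.
    shape:  (height, width) of the image.
    """
    h, w = shape
    r = size // 2
    padded = np.zeros((h + 2 * r, w + 2 * r))
    kernel = gaussian_kernel(size, sigma)
    for row, col in points:
        # In padded coordinates the kernel is centred on (row + r, col + r).
        padded[row:row + size, col:col + size] += kernel
    # Crop back to the image; points near the border lose part of their mass.
    return padded[r:r + h, r:r + w]


def weighted_scene_count(density_maps, weight_maps) -> float:
    """Scene-level count as a weighted sum over per-view density maps."""
    return float(sum((w * d).sum() for d, w in zip(density_maps, weight_maps)))


dots = [(20, 30), (40, 50), (25, 60)]        # hypothetical annotations
view = density_map(dots, shape=(64, 96))
single_view_count = view.sum()

# Two hypothetical views that see the same three people, each weighted 0.5.
weights = np.full(view.shape, 0.5)
scene_count = weighted_scene_count([view, view], [weights, weights])
```

Because each kernel is normalised to unit mass, summing the map recovers the number of annotated people as long as they lie away from the image border.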

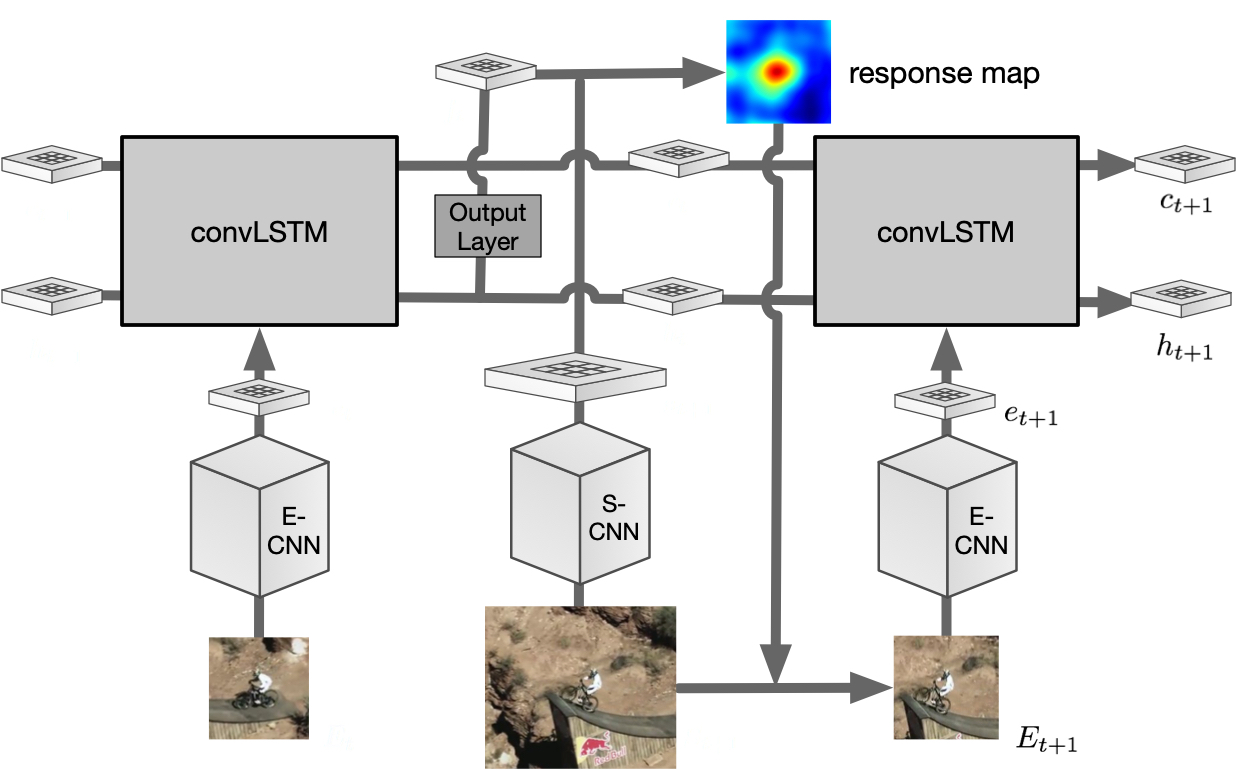

Visual Object Tracking & Segmentation

Localizing generic single objects in videos given the bounding box marked in the first frame.

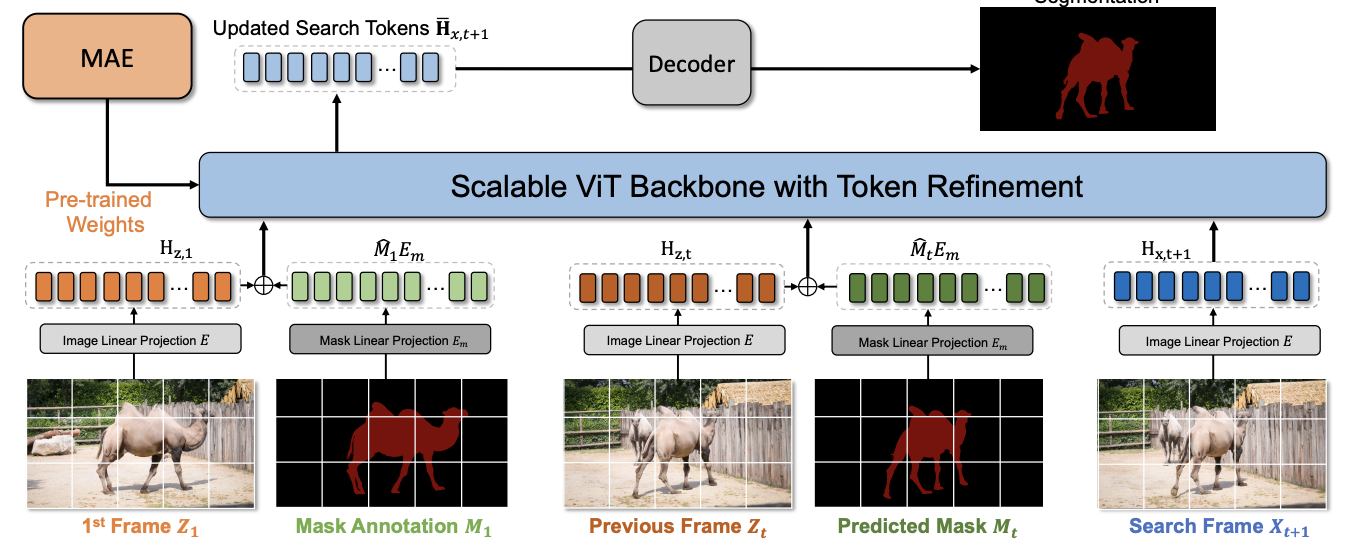

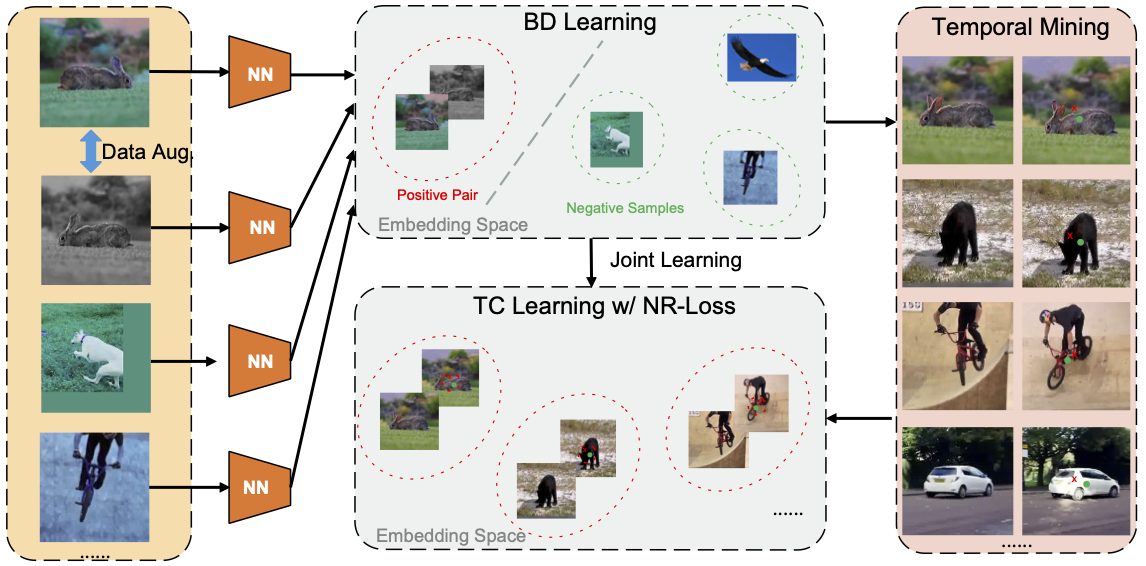

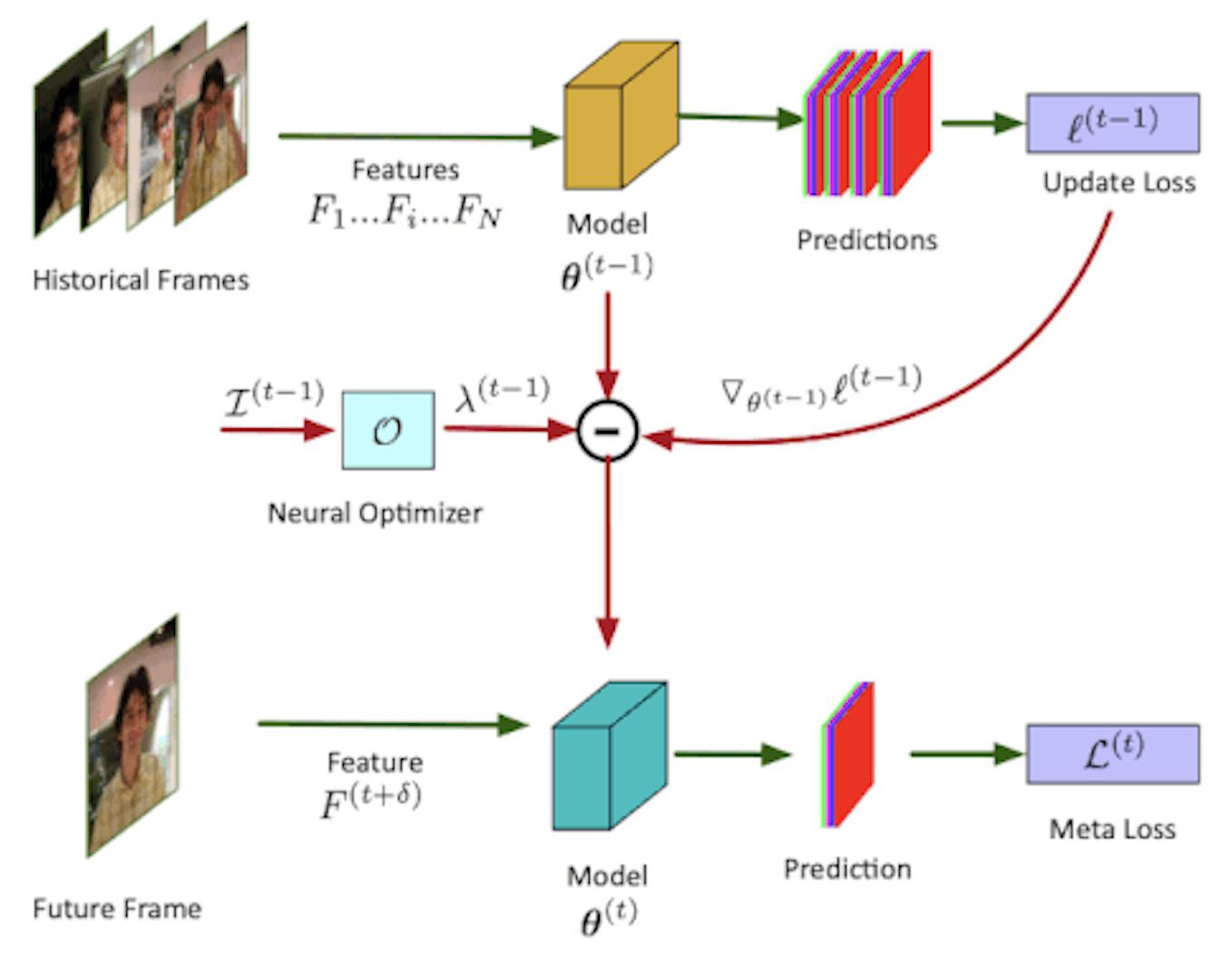

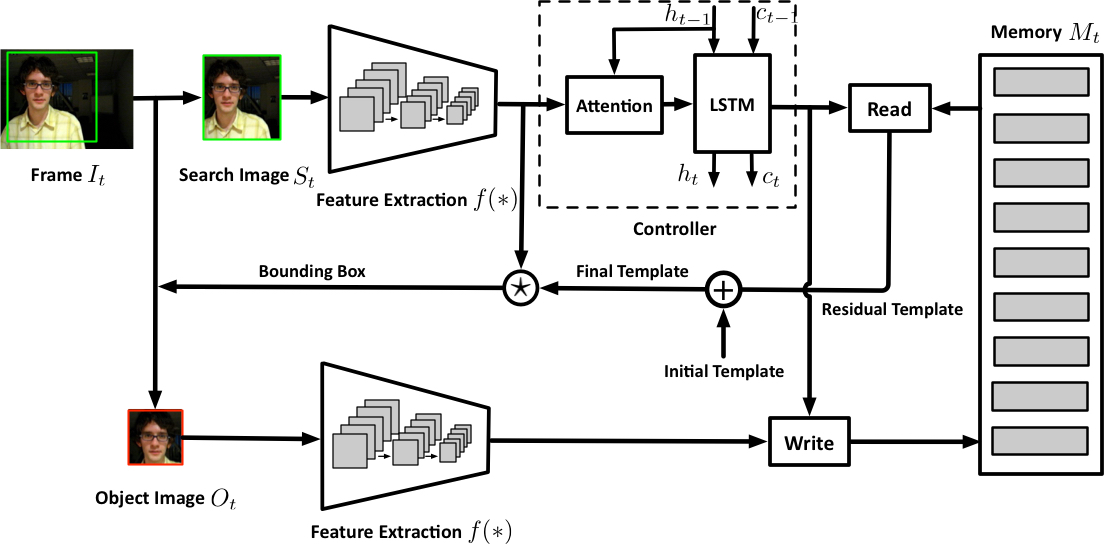

We propose a Simplified VOS framework (SimVOS), which removes the hand-crafted feature extraction and matching modules in previous approaches, to perform joint feature extraction and interaction via a single scalable transformer backbone. We also demonstrate that large-scale self-supervised pre-trained models can provide significant benefits to the VOS task. In addition, a new token refinement module is proposed to achieve a better speed-accuracy trade-off for scalable video object segmentation.

We study masked autoencoder (MAE) pre-training on videos for matching-based downstream tasks, including visual object tracking (VOT) and video object segmentation (VOS).

To reduce the human experts’ workload and improve the observation accuracy, we develop a practical system to detect Chinese White Dolphins in the wild automatically.

We propose a novel meta-graph adaptation network (MGA-Net) to effectively adapt backbone feature extractors in existing deep trackers to a specific online tracking task.

We propose a progressive unsupervised learning (PUL) framework, which entirely removes the need for annotated training videos in visual tracking.

We propose to train a recurrent neural optimizer offline to update a tracking model in a meta-learning setting, so that the model converges in a few gradient steps during online training.

We propose a dynamic memory network to adapt the template to the target’s appearance variations during tracking, where an LSTM is used to control the reading and writing process of the memory block.

We propose a recurrent filter generation method for visual tracking, which directly feeds the target’s image patch to a recurrent neural network (RNN) to estimate an object-specific filter for tracking.

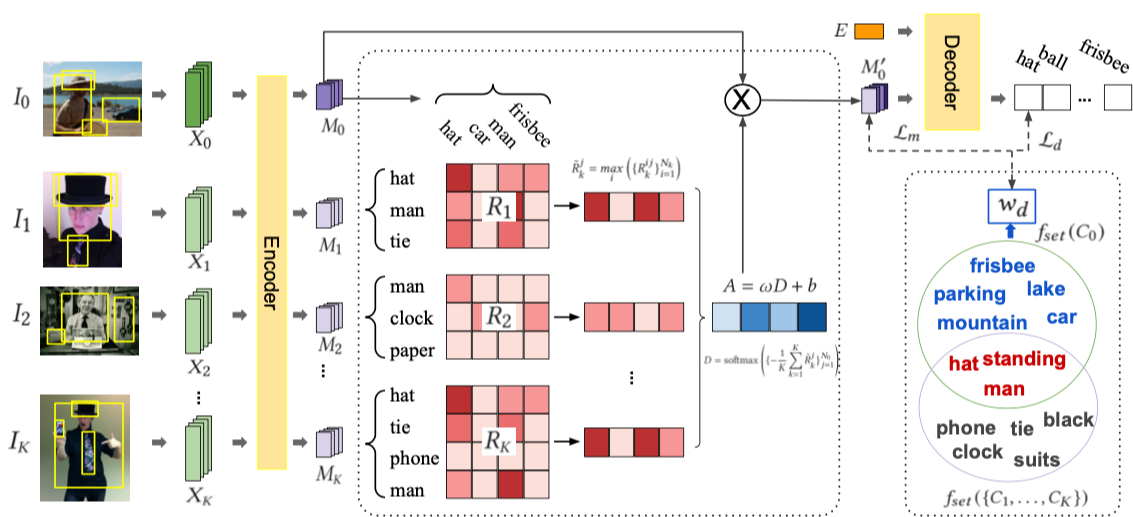

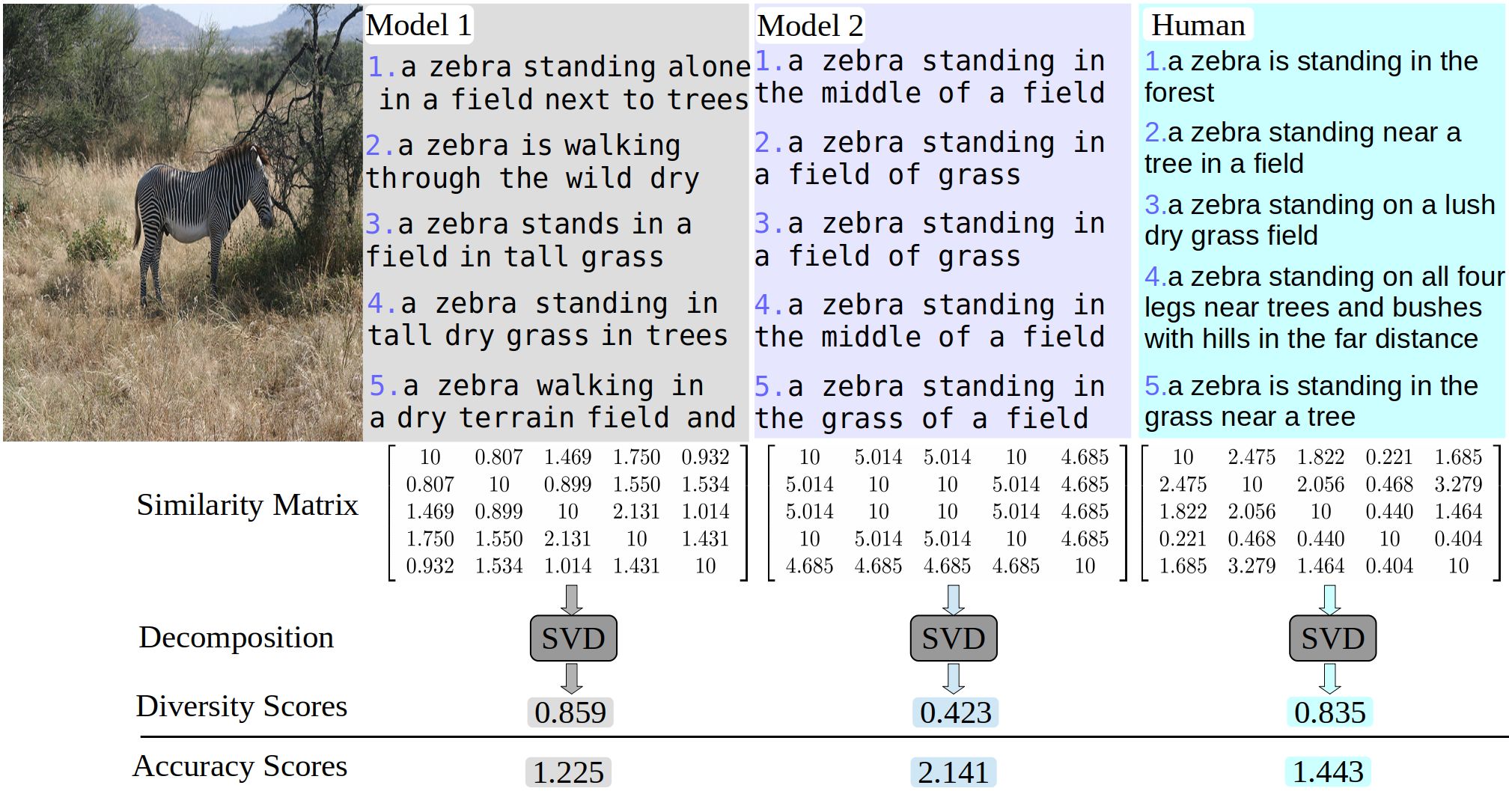

Image Captioning and Annotation

We propose a novel watermarking framework that leverages adversarial attacks to embed watermarks into images via two secret keys (network and signature) and deploys hypothesis tests to detect these watermarks with statistical guarantees.

We improve the distinctiveness of image captions using a Group-based Distinctive Captioning Model (GdisCap), which compares each image with other images in one similar group and highlights the uniqueness of each image.

To improve the distinctiveness of image captions, we first propose a metric, between-set CIDEr (CIDErBtw), to evaluate the distinctiveness of a caption with respect to those of similar images, and then propose several new training strategies for image captioning based on the new distinctiveness measure.

In this project, we focus on the diversity of image captions. First, we propose diversity metrics that are more correlated with human judgment. Second, we re-evaluate the existing models and find that (1) there is a large gap between humans and the existing models in the diversity-accuracy space, and (2) using reinforcement learning (CIDEr reward) to train captioning models improves accuracy but reduces diversity. Third, we propose a simple but efficient approach to balance diversity and accuracy via reinforcement learning, using a linear combination of cross-entropy and CIDEr reward.

RNN-based models dominate the field of image captioning. However, (1) RNNs have to be computed step by step, which is not easily parallelized; (2) there is a long path between the start and end of the sentence when using RNNs, and although tree structures can make the path shorter, trees require special processing; and (3) RNNs only learn single-level representations at each time step, while convolutional decoders are able to learn multi-level representations of concepts, each of which should correspond to an image area, which should benefit word prediction.

We annotate images using supervised multi-class labeling (SML), which treats semantic annotation as a multi-class classification problem. The system is scalable, and was applied to image databases with 60,000 images.
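
The diversity project above balances accuracy and diversity with a linear combination of cross-entropy and a CIDEr reward. The sketch below shows one plausible way such an objective could be written, assuming a standard REINFORCE-style policy-gradient term with a baseline; the function names, the mixing weight `lam`, and the reward values are illustrative assumptions and are not taken from the paper. The reward is passed in as a plain number standing in for a CIDEr score.

```python
import numpy as np


def cross_entropy_loss(log_probs: np.ndarray, target_ids: np.ndarray) -> float:
    """Mean negative log-likelihood of the ground-truth caption.

    log_probs:  (T, V) per-step log-probabilities under teacher forcing.
    target_ids: (T,) ground-truth word indices.
    """
    steps = np.arange(len(target_ids))
    return float(-log_probs[steps, target_ids].mean())


def reinforce_loss(log_probs: np.ndarray, sampled_ids: np.ndarray,
                   reward: float, baseline: float) -> float:
    """REINFORCE surrogate loss for one sampled caption.

    log_probs:   (T, V) per-step log-probabilities along the sampled caption.
    sampled_ids: (T,) sampled word indices.
    reward:      stand-in for the CIDEr score of the sampled caption.
    """
    steps = np.arange(len(sampled_ids))
    seq_log_prob = log_probs[steps, sampled_ids].sum()
    return float(-(reward - baseline) * seq_log_prob)


def combined_loss(lp_gt, target_ids, lp_sample, sampled_ids,
                  reward, baseline, lam: float) -> float:
    """lam = 1 gives pure cross-entropy; lam = 0 gives pure reward training."""
    xe = cross_entropy_loss(lp_gt, target_ids)
    rl = reinforce_loss(lp_sample, sampled_ids, reward, baseline)
    return lam * xe + (1.0 - lam) * rl


# Toy example: 3 time steps, vocabulary of 4 words, uniform predictions.
uniform = np.log(np.full((3, 4), 0.25))
gt = np.array([0, 1, 2])
sampled = np.array([3, 1, 0])
xe_only = combined_loss(uniform, gt, uniform, sampled, 1.0, 0.4, lam=1.0)
rl_only = combined_loss(uniform, gt, uniform, sampled, 1.0, 0.4, lam=0.0)
mixed = combined_loss(uniform, gt, uniform, sampled, 1.0, 0.4, lam=0.5)
```

Sweeping `lam` between 0 and 1 traces out a family of models between the cross-entropy-trained and reward-trained extremes.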

Explainable AI (XAI)

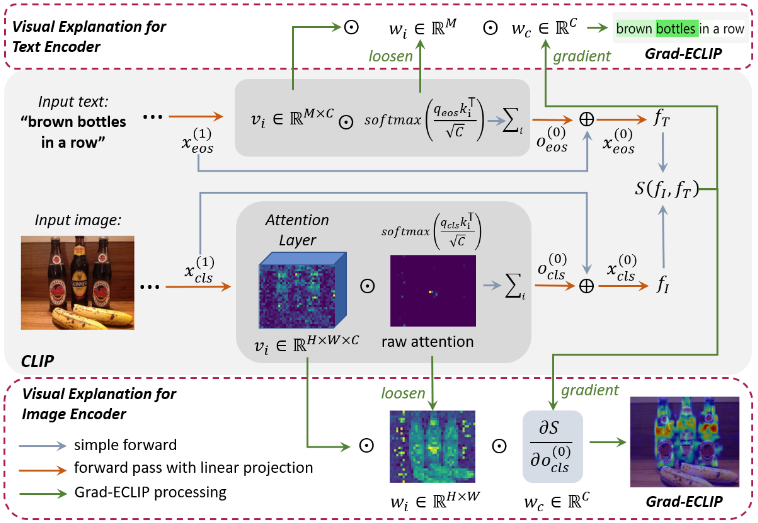

We propose a Gradient-based visual Explanation method for CLIP (Grad-ECLIP), which interprets the matching result of CLIP for a specific input image-text pair.

- "Gradient-based Visual Explanation for Transformer-based CLIP." In: International Conference on Machine Learning (ICML), Vienna, Jul 2024.

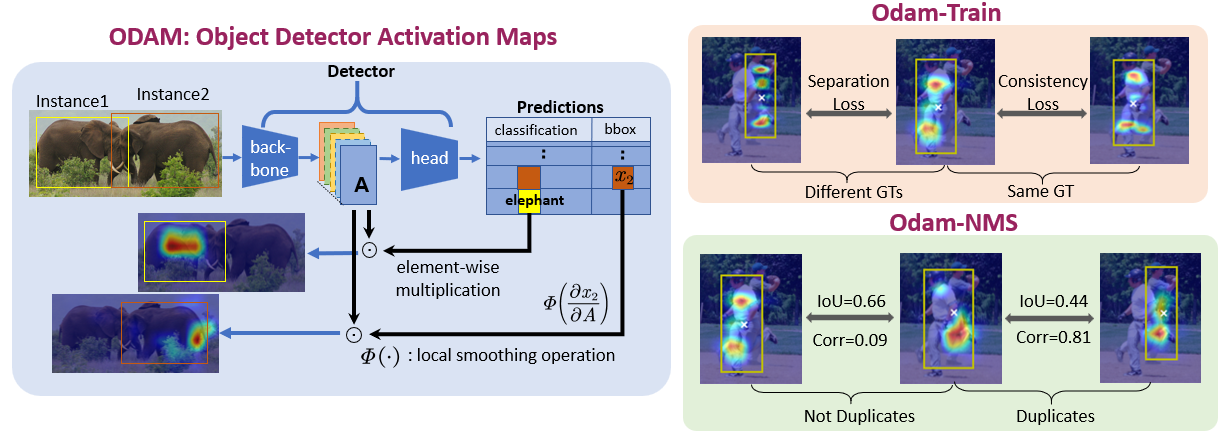

We propose the gradient-weighted Object Detector Activation Maps (ODAM), a visualized explanation technique for interpreting the predictions of object detectors, including class score and bounding box coordinates.

- "ODAM: Gradient-based Instance-Specific Visual Explanations for Object Detection." In: Intl. Conf. on Learning Representations (ICLR), Rwanda, May 2023. [code]

- "Gradient-based Instance-Specific Visual Explanations for Object Specification and Object Discrimination." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), to appear 2024.
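
To give a feel for what a gradient-based attribution map computes, here is a minimal sketch of the generic Grad-CAM-style recipe: weight each feature channel by the spatially averaged gradient of the target score, sum the weighted channels, and keep the positive part. This is a simplified, well-known baseline and is not the exact formulation of Grad-ECLIP or ODAM; the activations and gradients are assumed to have been obtained already from some network, and the array shapes are illustrative.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM-style heat map.

    activations: (K, H, W) feature maps of one layer.
    gradients:   (K, H, W) gradient of the target score w.r.t. those maps.
    Returns an (H, W) non-negative heat map.
    """
    # One importance weight per channel: the spatially averaged gradient.
    weights = gradients.mean(axis=(1, 2))                # shape (K,)
    # Weighted sum of channels.
    cam = np.tensordot(weights, activations, axes=1)     # shape (H, W)
    # Keep only evidence that increases the target score.
    return np.maximum(cam, 0.0)


# Toy inputs: two constant 2x2 feature channels.
acts = np.stack([np.ones((2, 2)), 2.0 * np.ones((2, 2))])
supporting = grad_cam(acts, np.ones((2, 2, 2)))
opposing = grad_cam(acts, np.stack([np.ones((2, 2)), -np.ones((2, 2))]))
```

In the toy example, `supporting` is 3 everywhere because both channels raise the score, while `opposing` is clipped to 0 because the negatively weighted channel dominates.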

Active Learning

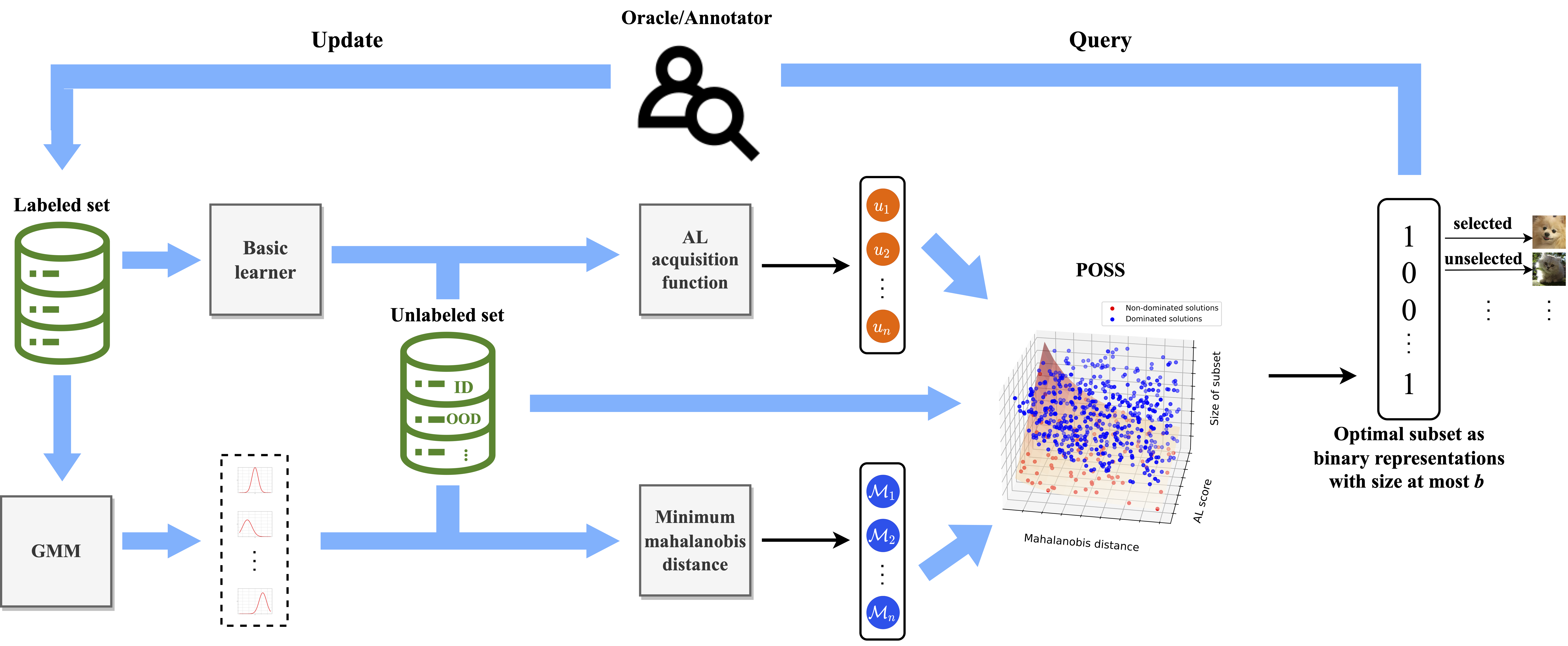

We propose a batch-mode Pareto Optimization Active Learning (POAL) framework for Active Learning under Out-of-Distribution data scenarios.

- "Pareto Optimization for Active Learning under Out-of-Distribution Data Scenarios." Transactions on Machine Learning Research (TMLR), June 2023.

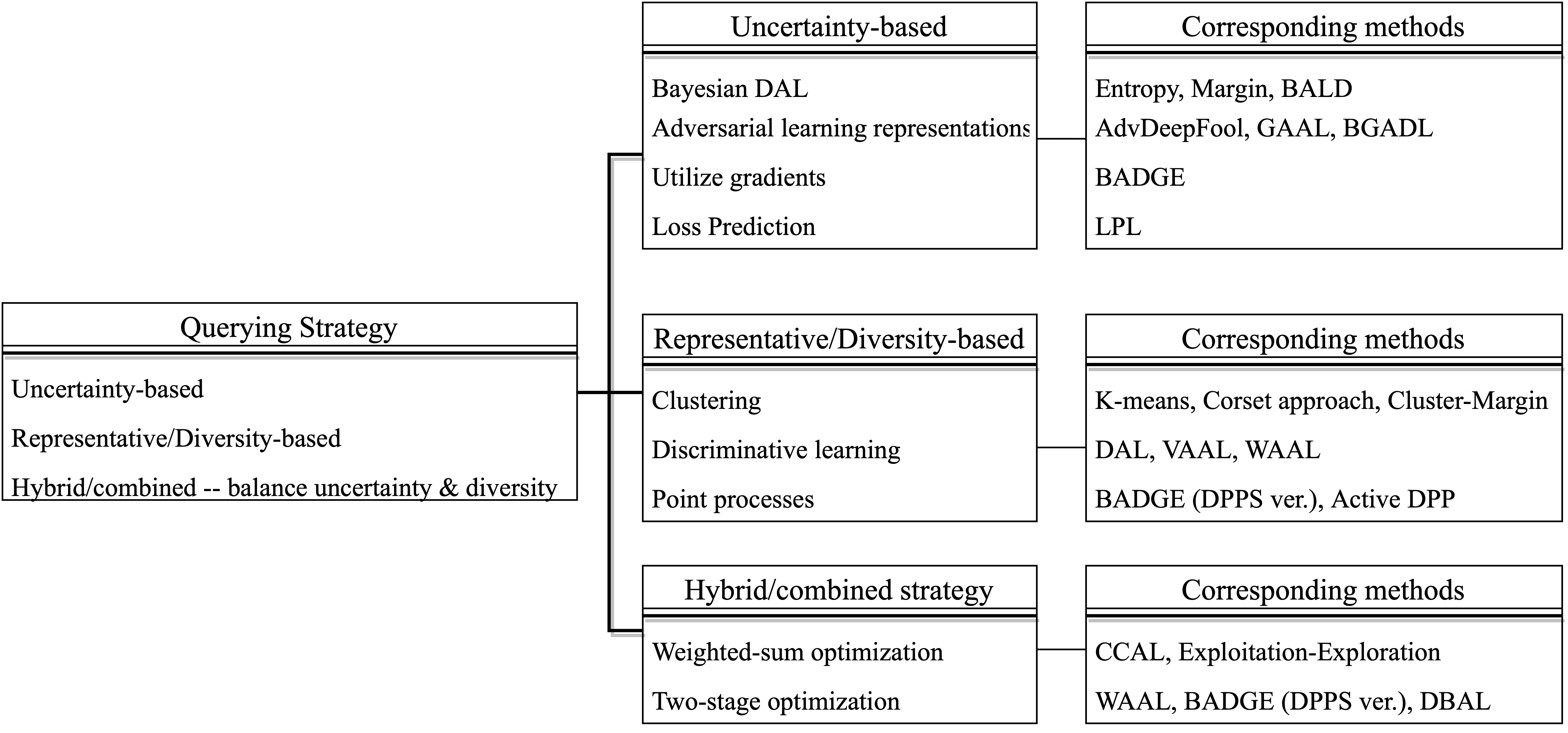

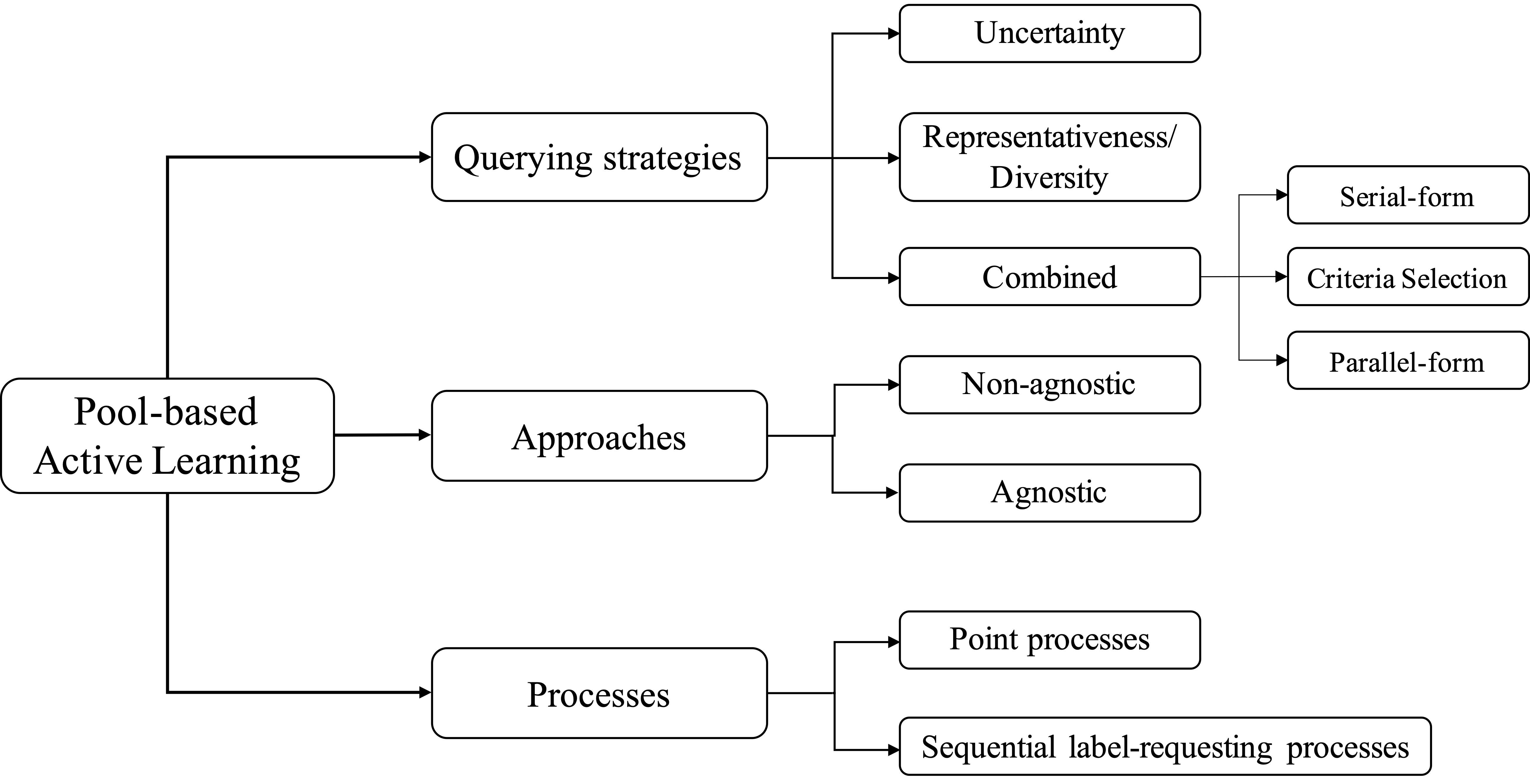

We present a comprehensive comparative survey of 19 Deep Active Learning approaches for classification tasks.

We introduce an active learning benchmark comprising 35 public datasets and experiment protocols, and evaluate 17 pool-based AL methods.

- "A Comparative Survey: Benchmarking for Pool-based Active Learning." In: International Joint Conf. on Artificial Intelligence (IJCAI), Survey Track, Aug 2021.
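
For readers new to pool-based active learning, the query step can be sketched with entropy-based uncertainty sampling, a common baseline strategy. The code below is only an illustration of that baseline, not of POAL or of any particular method in the benchmark; the function names and the toy probabilities are assumptions.

```python
import numpy as np


def predictive_entropy(probs: np.ndarray) -> np.ndarray:
    """Entropy of each row of class probabilities, shape (N, C) -> (N,)."""
    p = np.clip(probs, 1e-12, 1.0)   # avoid log(0)
    return -(p * np.log(p)).sum(axis=1)


def select_batch(probs: np.ndarray, batch_size: int) -> np.ndarray:
    """Indices of the batch_size most uncertain unlabeled pool samples."""
    scores = predictive_entropy(probs)
    return np.argsort(-scores, kind="stable")[:batch_size]


# Hypothetical model predictions on three unlabeled pool samples.
pool_probs = np.array([[0.5, 0.5],
                       [0.9, 0.1],
                       [0.6, 0.4]])
query = select_batch(pool_probs, batch_size=2)
```

In a full loop, the queried samples would be labeled, moved from the pool to the training set, and the model retrained before the next query.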

Probabilistic Models and Machine Learning

We propose a novel tree structure variational Bayesian method to learn the individual model and group model simultaneously by treating the group models as the parents of individual models, so that the individual model is learned from observations and regularized by its parents, and conversely, the parent model will be optimized to best represent its children.

- "Hierarchical Learning of Hidden Markov Models with Clustering Regularization." In: 37th Conference on Uncertainty in Artificial Intelligence (UAI), Jul 2021.

We propose a fully nested neural network (FN3) that runs only once to build a nested set of compressed/quantized models, which is optimal for different resource constraints. We then propose a Bayesian version that estimates the ordered dropout hyperparameter and has well-calibrated uncertainty.

- "Fully Nested Neural Network for Adaptive Compression and Quantization." In: International Joint Conf. on Artificial Intelligence (IJCAI), Yokohama, July 2020. [supplemental]

- "Bayesian Nested Neural Networks for Uncertainty Calibration and Adaptive Compression." In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2021.

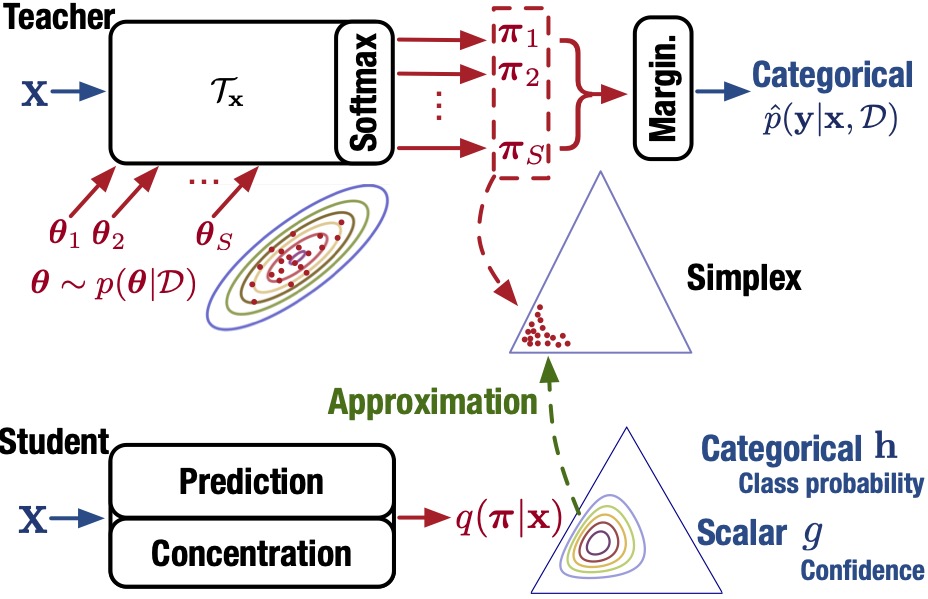

We propose a generic framework to approximate the output probability distribution induced by a Bayesian NN model posterior with a parameterized model and in an amortized fashion. The aim is to approximate the predictive uncertainty of a specific Bayesian model while alleviating the heavy workload of MC integration at test time.

- "Accelerating Monte Carlo Bayesian Prediction via Approximating Predictive Uncertainty over the Simplex." IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 33(4):1492-1506, Apr 2022 (online 2020).
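
For context, the Monte Carlo integration that this work aims to avoid at test time can be sketched as follows: draw several weight samples from the posterior, run the network once per sample, and average the resulting class probabilities. The snippet assumes the per-sample logits are already available and uses hypothetical names; it shows the baseline procedure, not the proposed amortized approximation.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def mc_predictive(logit_samples: np.ndarray) -> np.ndarray:
    """Monte Carlo estimate of the predictive distribution for one input.

    logit_samples: (S, C) logits, one row per posterior weight sample.
    Returns a length-C probability vector.
    """
    return softmax(logit_samples).mean(axis=0)


# Two hypothetical posterior samples for a 2-class problem.
samples = np.array([[0.0, 0.0],
                    [np.log(3.0), 0.0]])
pred = mc_predictive(samples)
```

The cost grows linearly with the number of samples S, since each sample requires a separate forward pass.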

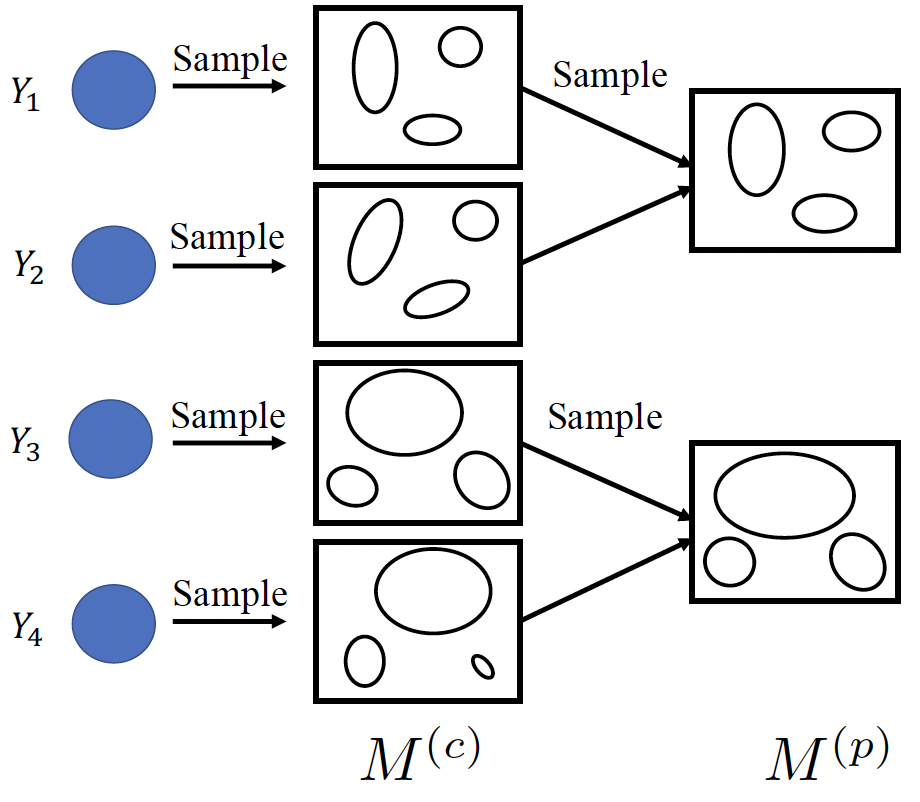

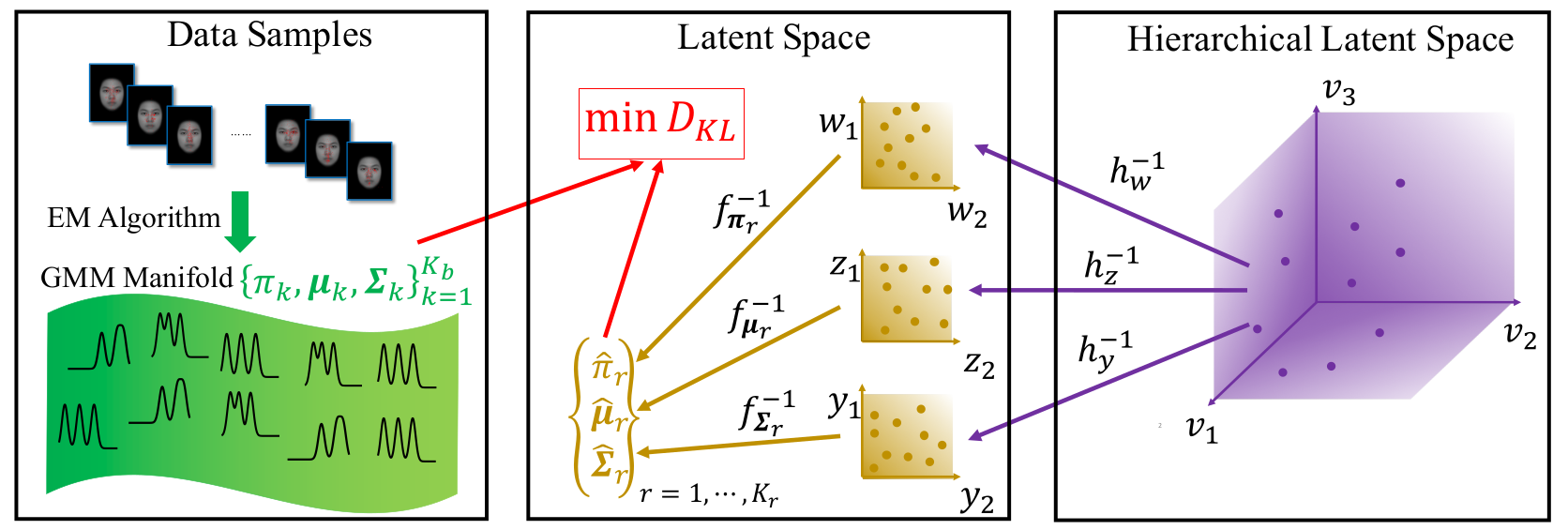

We propose a ParametRIc MAnifold Learning (PRIMAL) algorithm for Gaussian Mixture Models (GMMs), assuming that GMMs lie on or near a manifold that is generated from a low-dimensional hierarchical latent space through parametric mappings. Inspired by Principal Component Analysis (PCA), the generative processes for priors, means and covariance matrices are modeled by their respective latent space and parametric mapping.

- "PRIMAL-GMM: PaRametrIc MAnifold Learning of Gaussian Mixture Models." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 44(6):3197-3211, June 2022 (online 2021). [code]

- "Parametric Manifold Learning of Gaussian Mixture Models." In: International Joint Conference on Artificial Intelligence (IJCAI), Macau, Aug 2019.

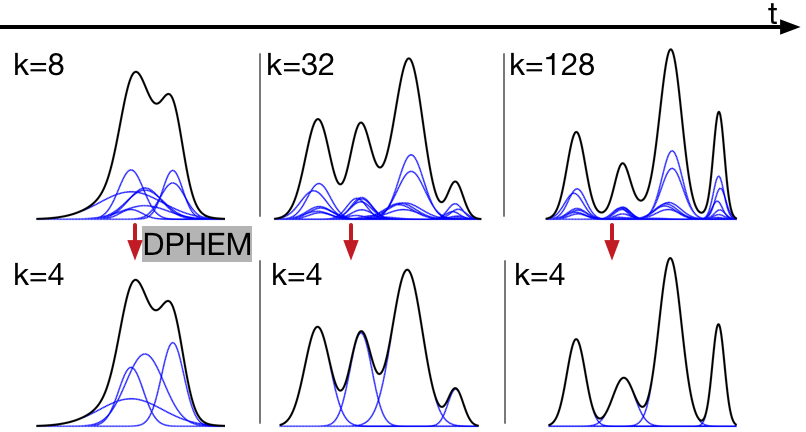

We propose an algorithm to simplify a Gaussian mixture model into a reduced mixture model with fewer mixture components, by maximizing a variational lower bound of the expected log-likelihood of a set of virtual samples.

- "Approximate Inference for Generic Likelihoods via Density-Preserving GMM Simplification." In: NIPS 2016 Workshop on Advances in Approximate Bayesian Inference, Barcelona, Dec 2016.

- "Density-Preserving Hierarchical EM Algorithm: Simplifying Gaussian Mixture Models for Approximate Inference." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 41(6):1323-1337, June 2019.

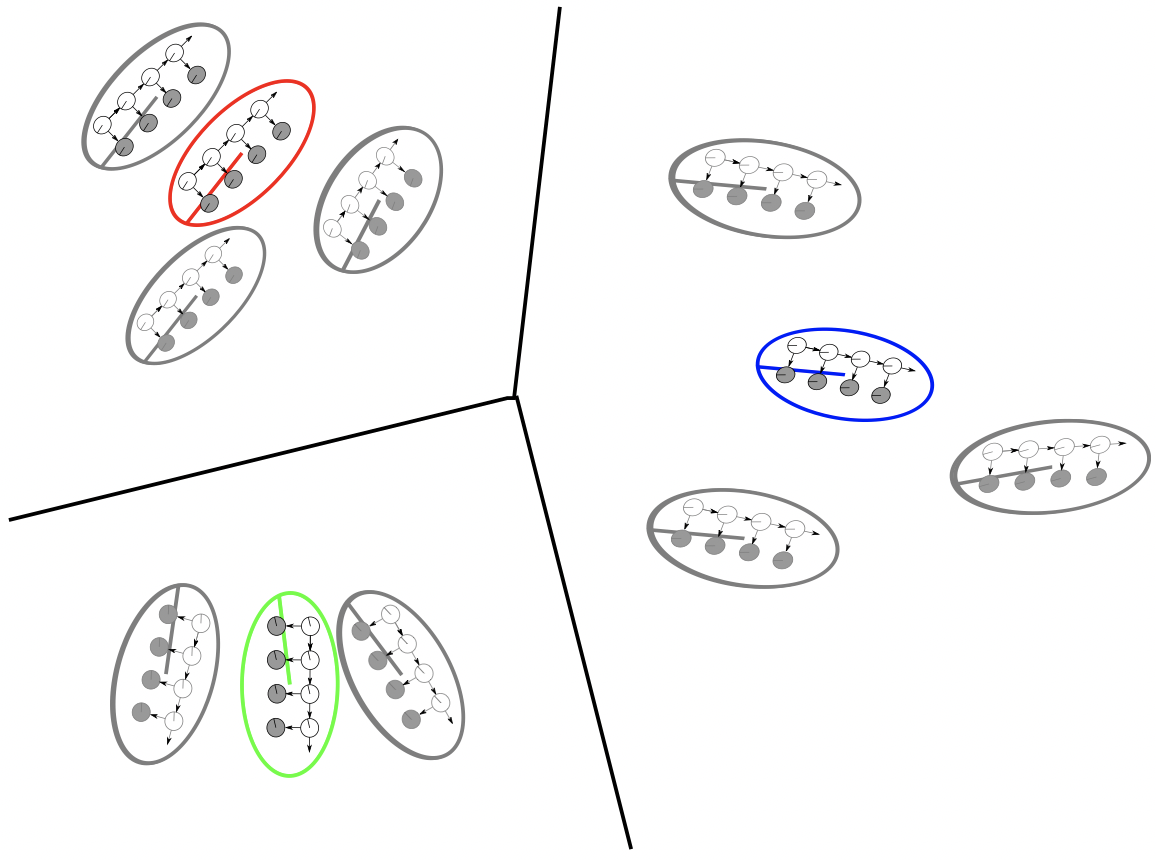

We propose a variational hierarchical EM algorithm for clustering hidden Markov models (HMMs), producing groups of similar HMMs and their representative HMM cluster centers. We also propose a variational Bayesian version that performs model selection.

- "Clustering hidden Markov models with variational HEM." Journal of Machine Learning Research (JMLR), 15(2):697-747, Feb 2014. [code]

- "Clustering Hidden Markov Models With Variational Bayesian Hierarchical EM." IEEE Trans. on Neural Networks and Learning Systems (TNNLS), 34(3):1537-1551, March 2023 (online 2021).

Eye-Gaze Analysis

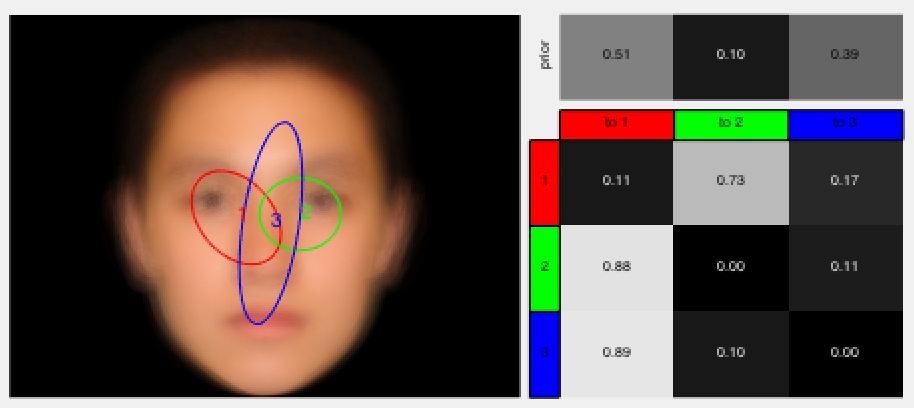

We model eye movements on faces by integrating deep neural networks and hidden Markov models (DNN+HMM).

- "Understanding the role of eye movement consistency in face recognition and autism through integrating deep neural networks and hidden Markov models." npj Science of Learning, 7:28, Oct 2022.

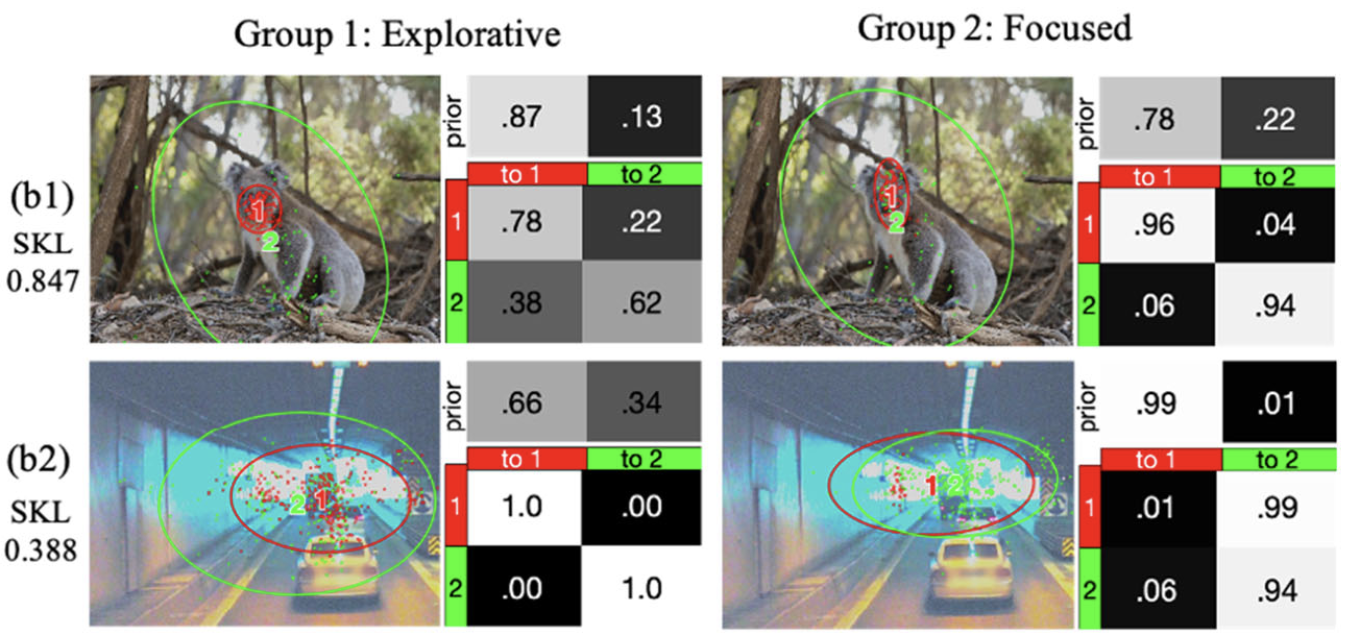

We analyze eye movement data on stimuli with different feature layouts. Through co-clustering HMMs, we discover common strategies on each stimulus and cluster subjects with similar strategies.

- "Eye Movement analysis with Hidden Markov Models (EMHMM) with co-clustering." Behavior Research Methods, 53:2473-2486, April 2021.

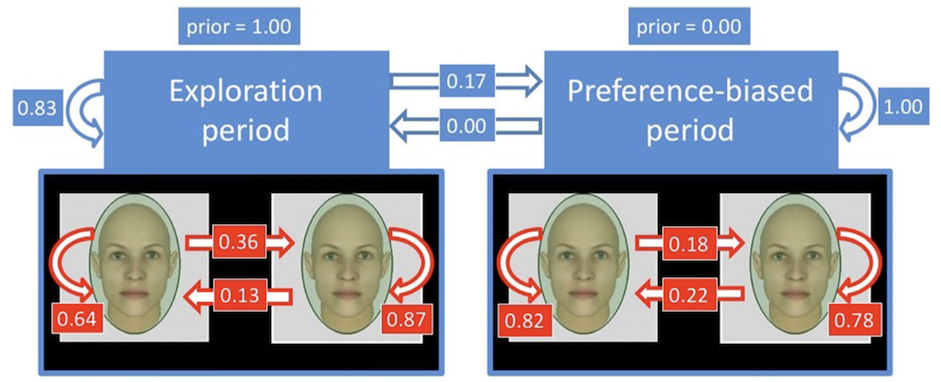

We use a switching hidden Markov model (EMSHMM) approach to analyze eye movement data in cognitive tasks involving cognitive state changes. A high-level state captures a participant’s cognitive state transitions during the task, and eye movement patterns during each high-level state are summarized with a regular HMM.

- "Eye movement analysis with switching hidden Markov models." Behavior Research Methods, 52:1026-1043, June 2020. [appendix]

We use hidden Markov models (HMMs) to analyze eye movement data. A person’s eye fixation sequence is summarized with an HMM, and common strategies among people are discovered by clustering HMMs.

- "Understanding eye movements in face recognition using hidden Markov models." Journal of Vision, 14(11):8, Sep 2014.
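
As a simplified illustration of how an HMM scores a fixation sequence, the sketch below runs the scaled forward algorithm on a sequence of discrete region-of-interest (ROI) labels. Treating fixations as discrete ROI indices with a categorical emission matrix is an assumption made to keep the example short; all parameter values and names are illustrative.

```python
import numpy as np


def hmm_log_likelihood(obs, pi: np.ndarray, A: np.ndarray, B: np.ndarray) -> float:
    """Log-likelihood of a discrete observation sequence under an HMM.

    obs: sequence of observation indices (e.g. ROI labels of fixations).
    pi:  (N,) initial state probabilities.
    A:   (N, N) transitions, A[i, j] = P(state j at t+1 | state i at t).
    B:   (N, M) emissions, B[i, k] = P(observation k | state i).
    """
    log_lik = 0.0
    alpha = pi * B[:, obs[0]]
    for t in range(len(obs)):
        if t > 0:
            # Propagate one step, then weight by the emission probability.
            alpha = (alpha @ A) * B[:, obs[t]]
        scale = alpha.sum()
        log_lik += np.log(scale)
        alpha = alpha / scale        # rescale to avoid numerical underflow
    return float(log_lik)


# Toy model: the chain starts in state 0 and never leaves it.
pi = np.array([1.0, 0.0])
A = np.eye(2)
B = np.array([[0.9, 0.1],
              [0.2, 0.8]])
ll = hmm_log_likelihood([0, 0, 1], pi, A, B)
```

Comparing this log-likelihood across several candidate HMMs is one way to ask which representative strategy best explains a person's fixation sequence.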

Data-Driven Computer Graphics

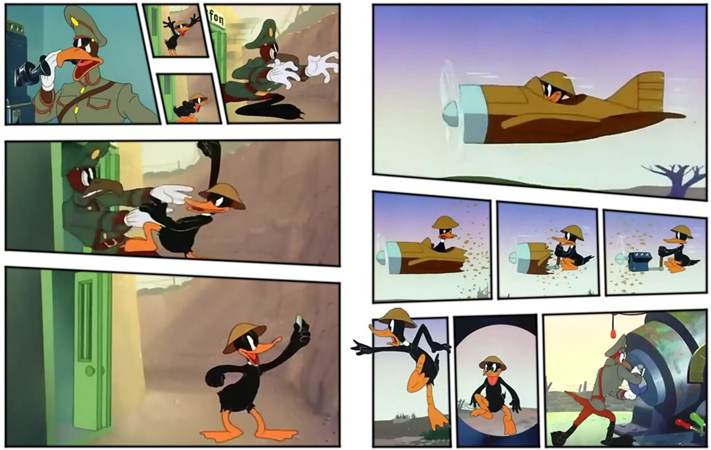

We propose a method for animating still manga imagery through camera movements, driven by motion and emotion semantics automatically extracted from the manga.

- "DynamicManga: Animating Still Manga via Camera Movement." IEEE Trans. on Multimedia (TMM), 19(1):160-172, Jan 2017. [supplemental | video]

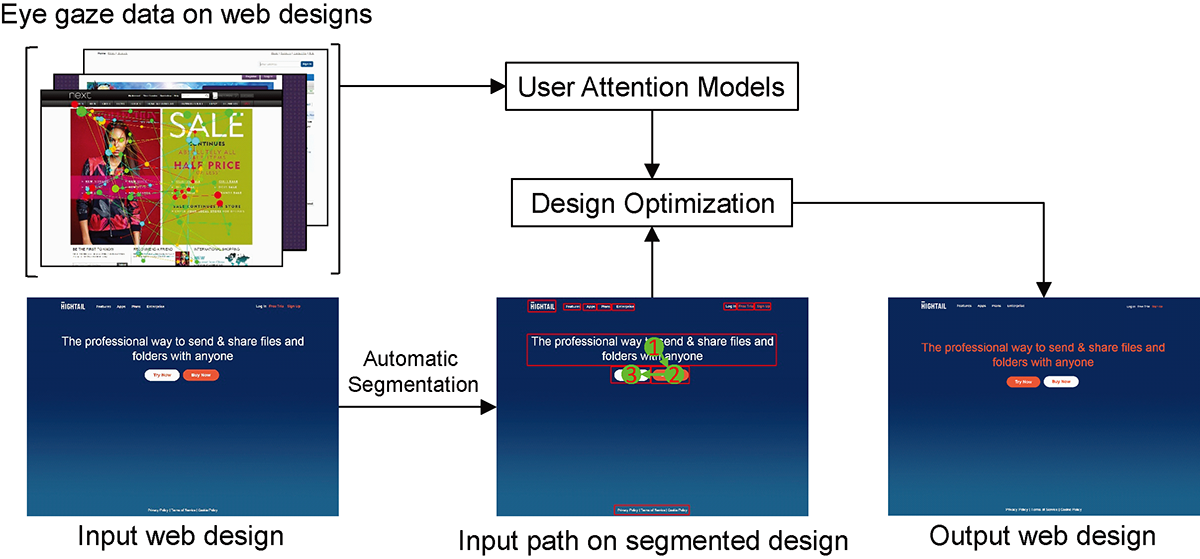

We present an approach that allows web designers to easily direct user attention via visual flow on web designs.

- "Directing User Attention via Visual Flow on Web Designs." ACM Transactions on Graphics (Proc. SIGGRAPH Asia 2016), Dec 2016. [supplemental | video]

We propose an approach for novices to synthesize a composition of panel elements that can effectively guide the reader’s attention to convey the story.

- "Look Over Here: Attention-Directing Composition of Manga Elements." ACM Transactions on Graphics (Proc. SIGGRAPH 2014), Aug 2014. [supplemental | video | slides]

We propose an approach to automatically produce a manga layout from a set of input artworks, which is based on a generative layout model and parametric style models.

- "Automatic Stylistic Manga Layout." ACM Transactions on Graphics (Proc. SIGGRAPH Asia 2012), Singapore, Nov 2012. [supplemental | video | slides]

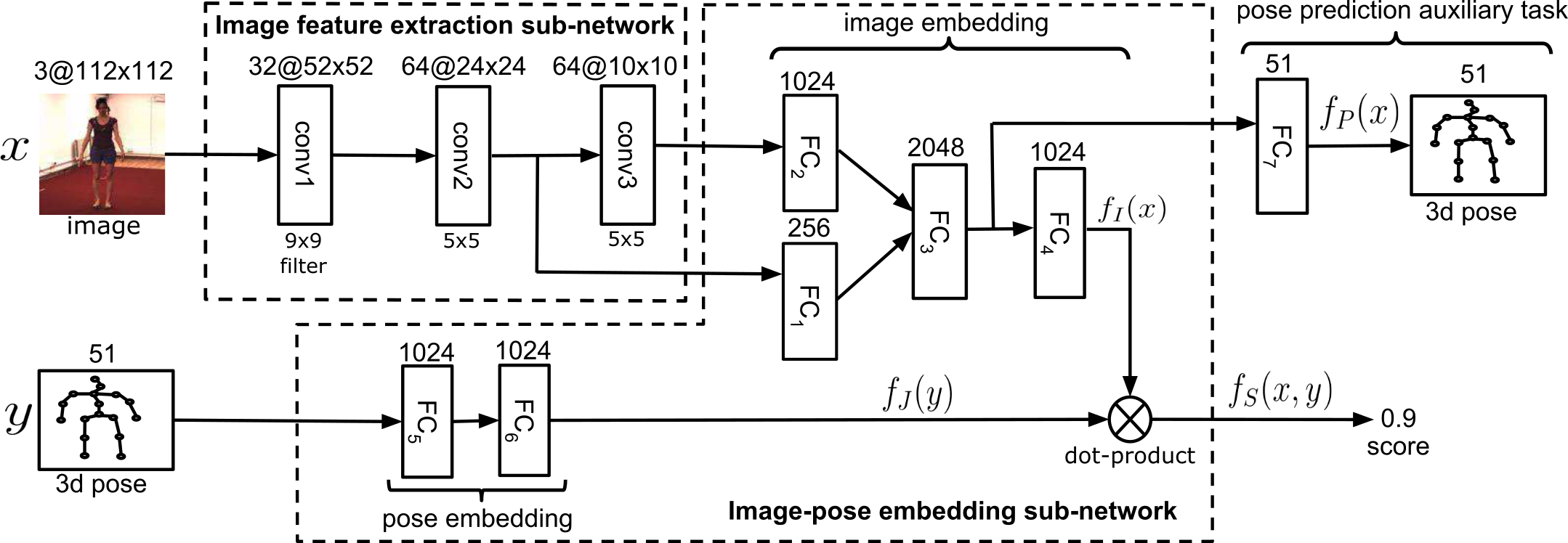

Human Pose Recognition and Tracking

Recognizing and tracking 2D and 3D human pose in images and videos.

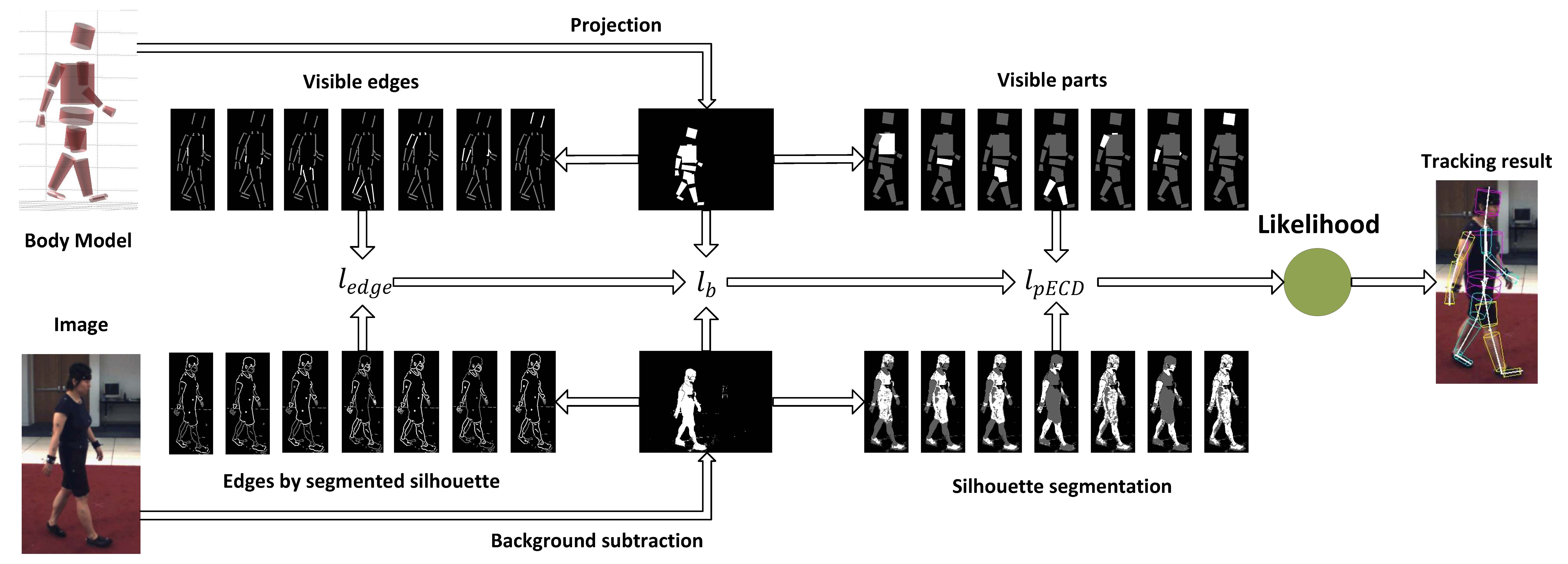

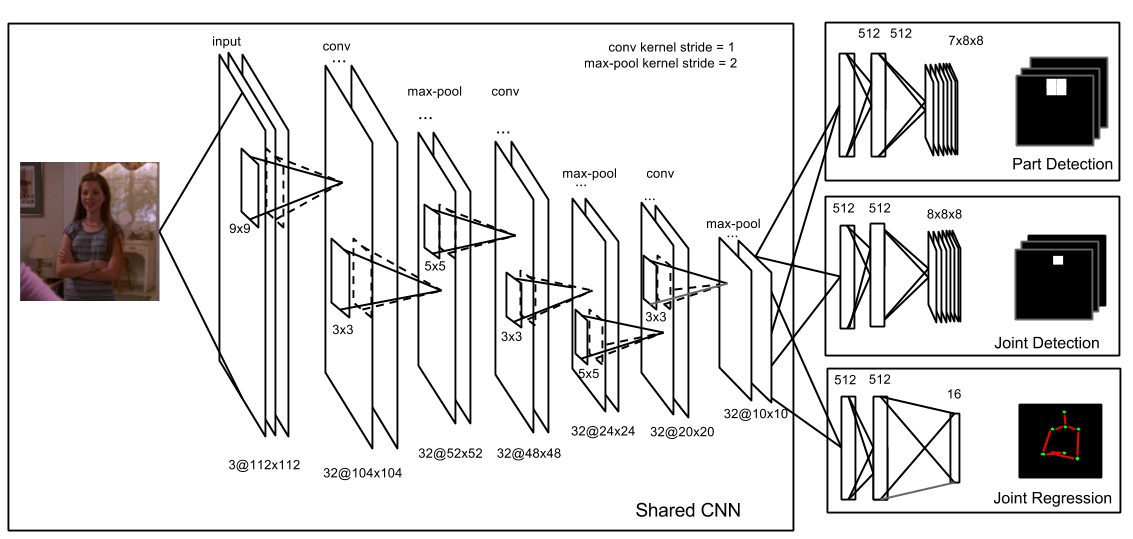

We collect a multi-view and stereo-depth dataset for 3D human pose estimation, which consists of challenging martial arts actions (Tai-chi and Karate), dancing actions (hip-hop and jazz), and sports actions (basketball, volleyball, football, rugby, tennis and badminton).

We propose a maximum-margin structured learning framework with a deep neural network that learns the image-pose score function for human pose estimation.

We propose a robust likelihood function for 3D human pose tracking, which is robust to small pose changes and better able to localize partially occluded and overlapping parts.

We propose a heterogeneous multi-task learning framework for 2D human pose estimation from monocular images using a deep convolutional neural network that combines pose regression and part detection. We also extend the model to 3D human pose estimation.

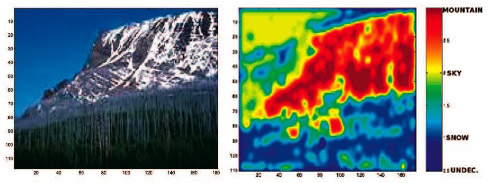

Dynamic Texture Models

A family of generative stochastic dynamic texture models for analyzing motion in video, and time-series in general (project overview).

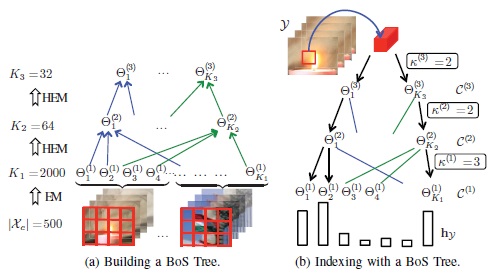

We propose the BoSTree that enables efficient mapping of videos to the bag-of-systems (BoS) codebook using a tree-structure, which enables the practical use of larger, richer codebooks.

- "A Scalable and Accurate Descriptor for Dynamic Textures using Bag of System Trees." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 37(4):697-712, Apr 2015. [appendix]

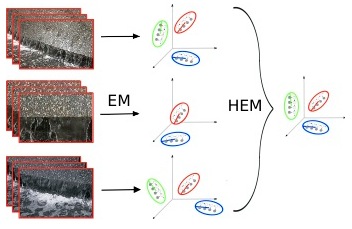

We propose a hierarchical EM algorithm capable of clustering dynamic texture models and learning novel cluster centers that are representative of the cluster members. DT clustering can be applied to semantic motion annotation and bag-of-systems codebook generation.

- "Clustering Dynamic Textures with the Hierarchical EM Algorithm for Modeling Video." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 35(7):1606-1621, Jul 2013. [appendix]

The background model is based on a generalization of the Stauffer-Grimson background model, where each mixture component is a dynamic texture. We derive an on-line algorithm for updating the parameters using a set of sufficient statistics of the model.

- "Generalized Stauffer-Grimson background subtraction for dynamic scenes." Machine Vision and Applications, 22(5):751-766, Sep 2011.

One disadvantage of the dynamic texture is its inability to account for multiple co-occurring textures in a single video. We extend the dynamic texture to a multi-state (layered) dynamic texture that can learn regions containing different dynamic textures.

- "Layered dynamic textures." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 31(10):1862-1879, Oct 2009.

We introduce the mixture of dynamic textures, which models a collection of videos as samples from a set of dynamic textures. We use the model for video clustering and motion segmentation.

- "Modeling, clustering, and segmenting video with mixtures of dynamic textures." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 30(5):909-926, May 2008.

We introduce a kernelized dynamic texture, which has a non-linear observation function learned with kernel PCA. The new texture model can account for more complex patterns of motion, such as chaotic motion (e.g. boiling water and fire) and camera motion (e.g. panning and zooming), better than the original dynamic texture.

- "Classifying Video with Kernel Dynamic Textures." In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, Jun 2007.
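
The basic (linear) dynamic texture underlying the models above is commonly written as a linear dynamical system: a hidden state evolves as x_{t+1} = A x_t + v_t and each frame is observed as y_t = C x_t + w_t. The sketch below synthesizes a short noise-free sequence from such a system and re-estimates the dynamics with the widely used SVD/least-squares procedure. It is a minimal illustration under stated assumptions (no noise, no mean subtraction, tiny 5-pixel "frames"); the function names and parameter values are hypothetical.

```python
import numpy as np


def synthesize(A: np.ndarray, C: np.ndarray, x0: np.ndarray, T: int) -> np.ndarray:
    """Generate T noise-free frames y_t = C x_t with x_{t+1} = A x_t.

    Returns Y of shape (T, m), one flattened frame per row.
    """
    xs = np.zeros((T, A.shape[0]))
    x = x0
    for t in range(T):
        xs[t] = x
        x = A @ x
    return xs @ C.T


def fit_dynamic_texture(Y: np.ndarray, n: int):
    """Estimate (A, C) for an n-dimensional state from frames Y of shape (T, m)."""
    # PCA of the frames via SVD: columns of U span the observation subspace.
    U, s, Vt = np.linalg.svd(Y.T, full_matrices=False)
    C_hat = U[:, :n]
    X = (s[:n, None] * Vt[:n]).T                  # estimated states, (T, n)
    # Least-squares fit of the one-step dynamics X[t+1] ~ A_hat X[t].
    W, *_ = np.linalg.lstsq(X[:-1], X[1:], rcond=None)
    return W.T, C_hat


theta = 0.3
A_true = 0.95 * np.array([[np.cos(theta), -np.sin(theta)],
                          [np.sin(theta),  np.cos(theta)]])
C_true = np.random.default_rng(0).standard_normal((5, 2))
Y = synthesize(A_true, C_true, x0=np.array([1.0, 0.0]), T=60)
A_hat, C_hat = fit_dynamic_texture(Y, n=2)
```

The estimated state lives in a different basis than the true one, so `A_hat` matches `A_true` only up to a similarity transform; basis-independent quantities such as the eigenvalues and the trace agree.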

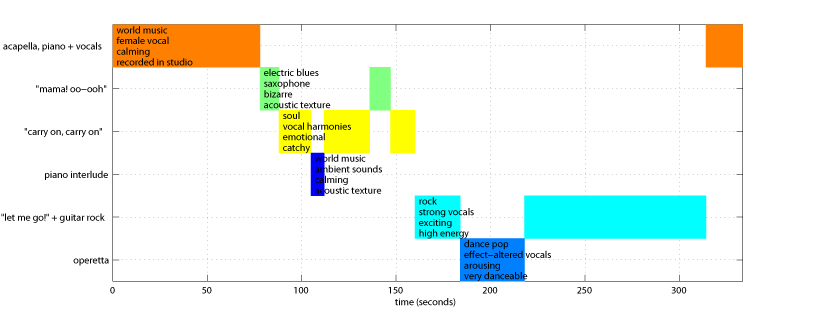

Music Analysis

We propose an approach to automatic music annotation and retrieval that is based on the dynamic texture mixture, a generative time series model of musical content. The new annotation model better captures temporal (e.g., rhythmical) aspects as well as timbral content.

We model a time-series of audio feature vectors, extracted from a short audio fragment, as a dynamic texture. The musical structure of a song (e.g. chorus, verse, and bridge) is discovered by segmenting the song using the mixture of dynamic textures. The song segmentations are used for song retrieval, song annotation, and database visualization.