About

Welcome to the Video, Image, and Sound Analysis Lab (VISAL) at the City University of Hong Kong! The lab is directed by Prof. Antoni Chan in the Department of Computer Science.

Our main research activities include:

- Computer Vision, Surveillance

- Machine Learning, Pattern Recognition

- Computer Audition, Music Information Retrieval

- Eye Gaze Analysis

For more information about our current research, please visit the projects and publications pages.

Opportunities for graduate students and research assistants – if you are interested in joining the lab, please check this information.

Latest News

- [May 27, 2025]

Congratulations to Chenyang for defending her thesis!

- [Feb 11, 2025]

Congratulations to Jiuniu for defending his thesis!

- [Apr 9, 2024]

Congratulations to Qiangqiang for defending his thesis!

- [Jun 16, 2023]

Congratulations to Hui for defending her thesis!

Recent Publications

- Large language model tokens are psychologically salient. In: Annual Conference of the Cognitive Science Society (CogSci), San Francisco, to appear Jul 2025.

- Whose Values Prevail? Bias in Large Language Model Value Alignment. In: Annual Conference of the Cognitive Science Society (CogSci), San Francisco, to appear Jul 2025.

- Eye movement behavior during mind wandering in older adults. In: Annual Conference of the Cognitive Science Society (CogSci), San Francisco, to appear Jul 2025.

- DistinctAD: Distinctive Audio Description Generation in Contexts. In: IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), to appear 2025 (highlight).

- Point-to-Region Loss for Semi-Supervised Point-Based Crowd Counting. In: IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), to appear 2025 (highlight).

- Advancing Multiple Instance Learning with Continual Learning for Whole Slide Imaging. In: IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), to appear 2025 (highlight).

- Speaker’s Use of Mental Verbs to Convey Belief States: A Comparison between Humans and Large Language Model (LLM). In: 19th International Pragmatics Conference, Brisbane, Jun 2025.

- Collaborative contrastive learning for cross-domain gaze estimation. Pattern Recognition, 161:111244, May 2025.

- Proximal Mapping Loss: Understanding Loss Functions in Crowd Counting & Localization. In: Intl. Conf. on Learning Representations (ICLR), Singapore, Apr 2025.

- Another Perspective of Over-Smoothing: Alleviating Semantic Over-Smoothing in Deep GNNs. IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 36(4):6897-6910, Apr 2025 (online May 2024).

Recent Project Pages

We propose a novel watermarking framework that leverages adversarial attacks to embed watermarks into images via two secret keys (network and signature) and deploys hypothesis tests to detect these watermarks with statistical guarantees.

- "A Secure Image Watermarking Framework with Statistical Guarantees via Adversarial Attacks on Secret Key Networks." In: European Conference on Computer Vision (ECCV), Milano, Oct 2024. [supplemental]

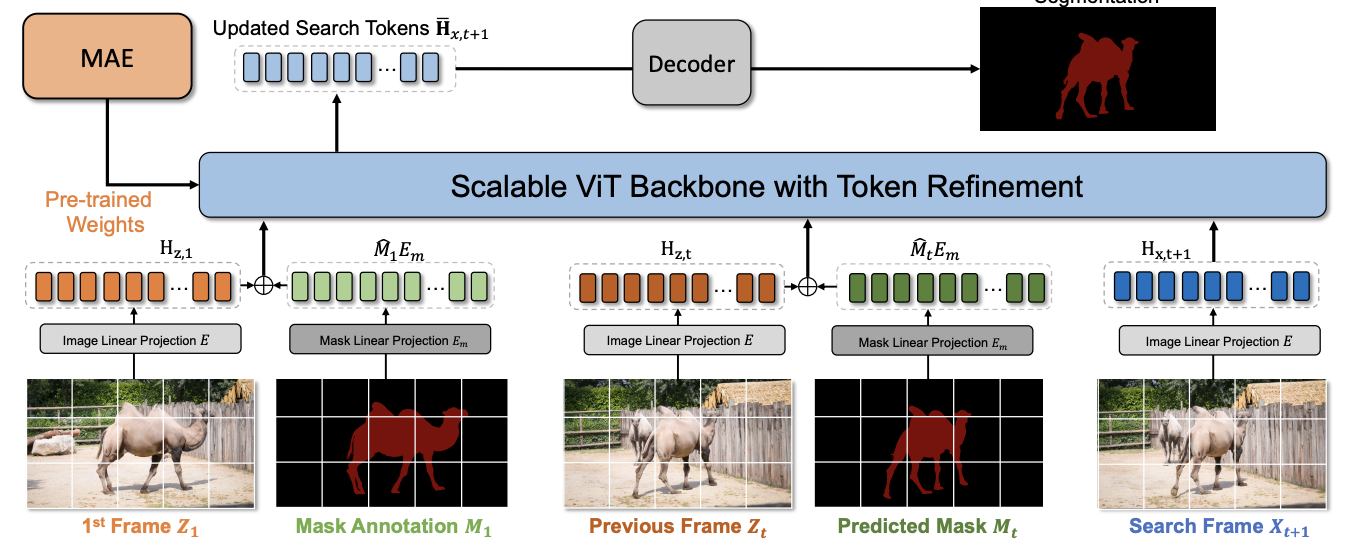

We propose a Simplified VOS framework (SimVOS), which removes the hand-crafted feature extraction and matching modules in previous approaches, to perform joint feature extraction and interaction via a single scalable transformer backbone. We also demonstrate that large-scale self-supervised pre-trained models can provide significant benefits to the VOS task. In addition, a new token refinement module is proposed to achieve a better speed-accuracy trade-off for scalable video object segmentation.

- "Scalable Video Object Segmentation with Simplified Framework." In: International Conf. Computer Vision (ICCV), Paris, Oct 2023. [code]

We study masked autoencoder (MAE) pre-training on videos for matching-based downstream tasks, including visual object tracking (VOT) and video object segmentation (VOS).

- "DropMAE: Masked Autoencoders with Spatial-Attention Dropout for Tracking Tasks." In: IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), Jun 2023. [code]

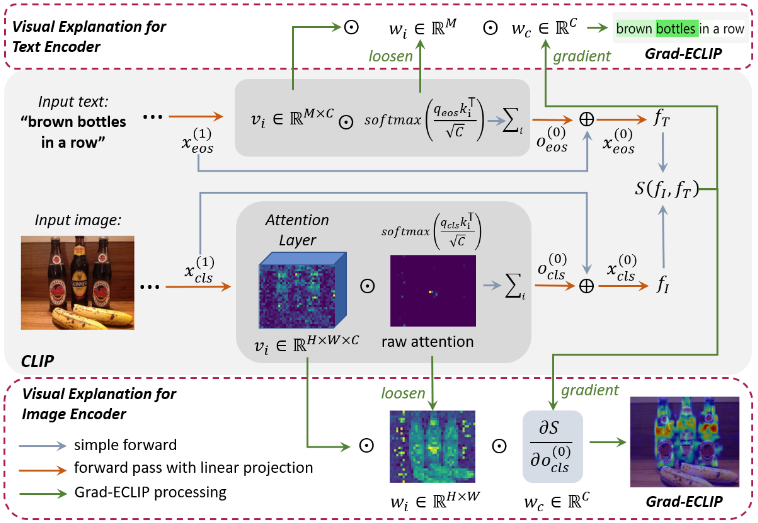

We propose a Gradient-based visual Explanation method for CLIP (Grad-ECLIP), which interprets the matching result of CLIP for a specific input image-text pair.

- "Gradient-based Visual Explanation for Transformer-based CLIP." In: International Conference on Machine Learning (ICML), Vienna, Jul 2024.

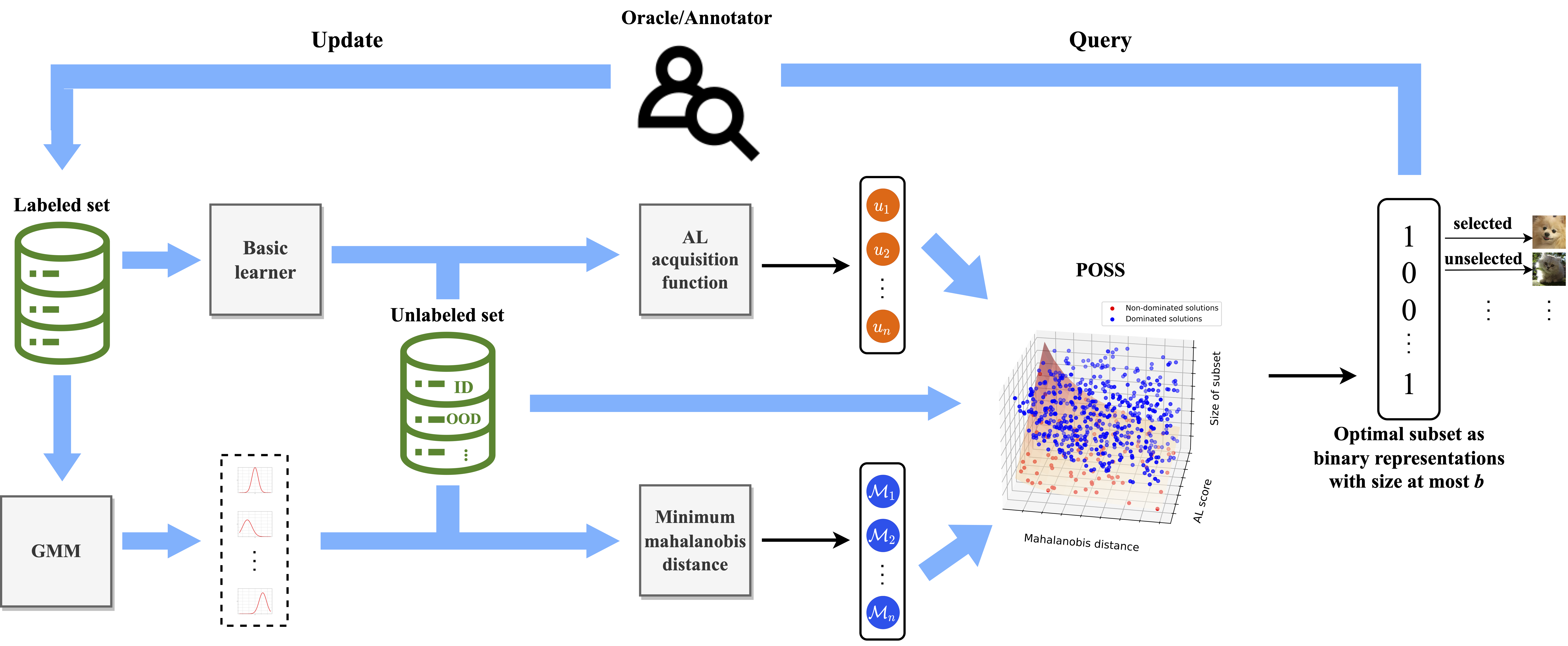

We propose a batch-mode Pareto Optimization Active Learning (POAL) framework for Active Learning under Out-of-Distribution data scenarios.

- "Pareto Optimization for Active Learning under Out-of-Distribution Data Scenarios." Transactions on Machine Learning Research (TMLR), Jun 2023.

Recent Datasets and Code

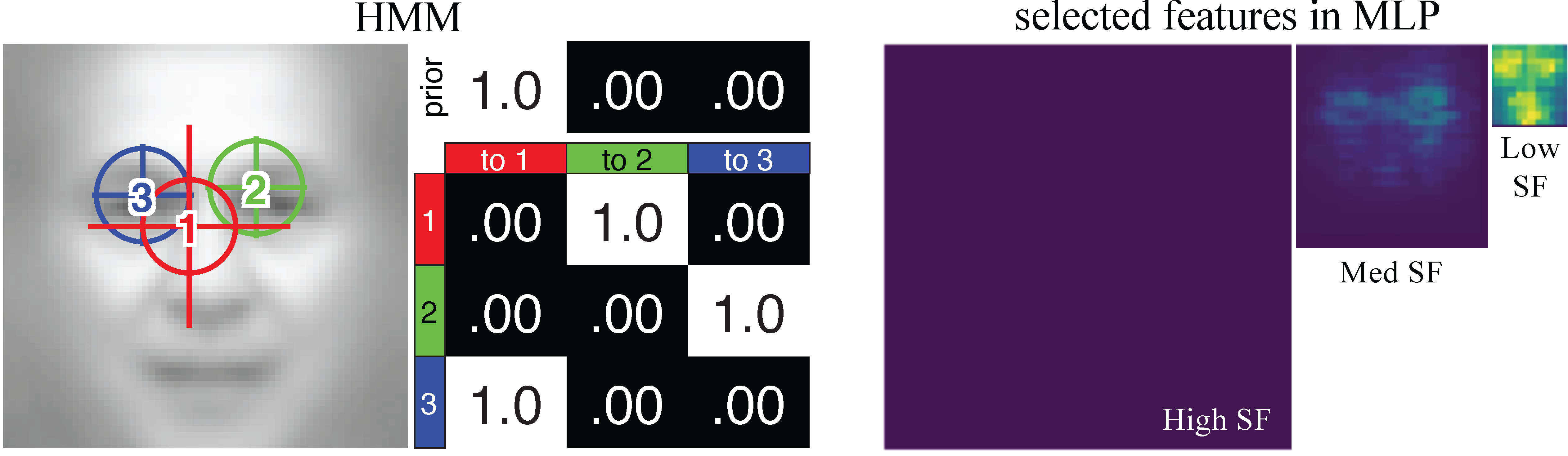

Modeling Eye Movements with Deep Neural Networks and Hidden Markov Models (DNN+HMM)

This is the toolbox for modeling eye movements and feature learning with deep neural networks and hidden Markov models (DNN+HMM).

- Files: download here

- Project page

- If you use this toolbox please cite:

Understanding the role of eye movement consistency in face recognition and autism through integrating deep neural networks and hidden Markov models. npj Science of Learning, 7:28, Oct 2022.

Dolphin-14k: Chinese White Dolphin detection dataset

A dataset consisting of Chinese White Dolphin (CWD) and distractors for detection tasks.

- Files: Google Drive, Readme

- Project page

- If you use this dataset please cite:

Chinese White Dolphin Detection in the Wild. In: ACM Multimedia Asia (MMAsia), Gold Coast, Australia, Dec 2021.

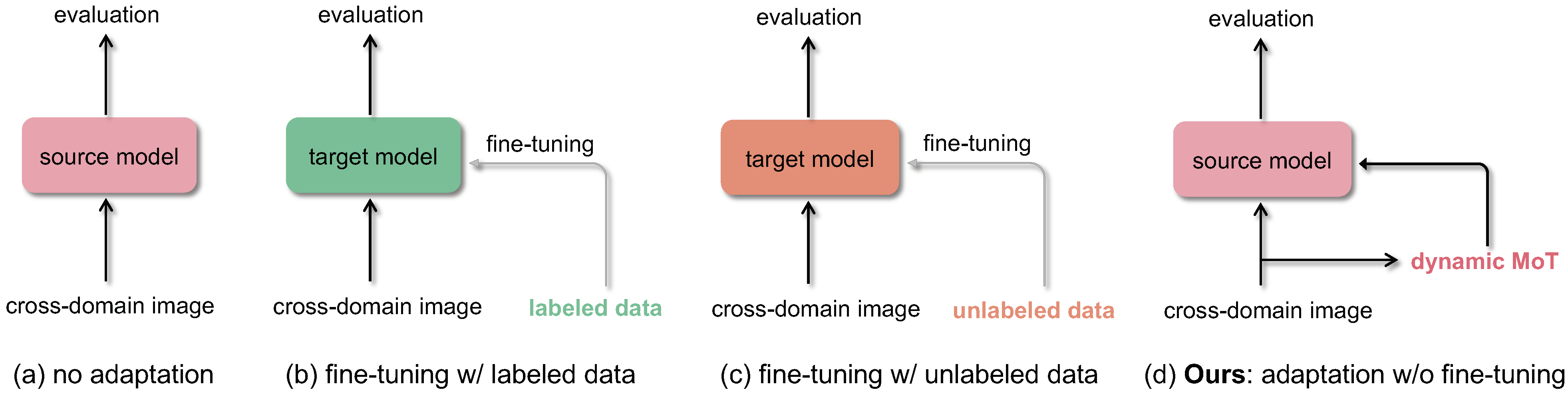

Crowd counting: Zero-shot cross-domain counting

Dynamic momentum adaptation for zero-shot cross-domain crowd counting.

- Files: github

- Project page

- If you use this toolbox please cite:

Dynamic Momentum Adaptation for Zero-Shot Cross-Domain Crowd Counting. In: ACM Multimedia (MM), Oct 2021.

CVCS: Cross-View Cross-Scene Multi-View Crowd Counting Dataset

Synthetic dataset for cross-view cross-scene multi-view counting. The dataset contains 31 scenes, each with about 100 camera views. For each scene, we capture 100 multi-view images of crowds.

- Files: Google Drive

- Project page

- If you use this dataset please cite:

Cross-View Cross-Scene Multi-View Crowd Counting. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 557-567, Jun 2021.

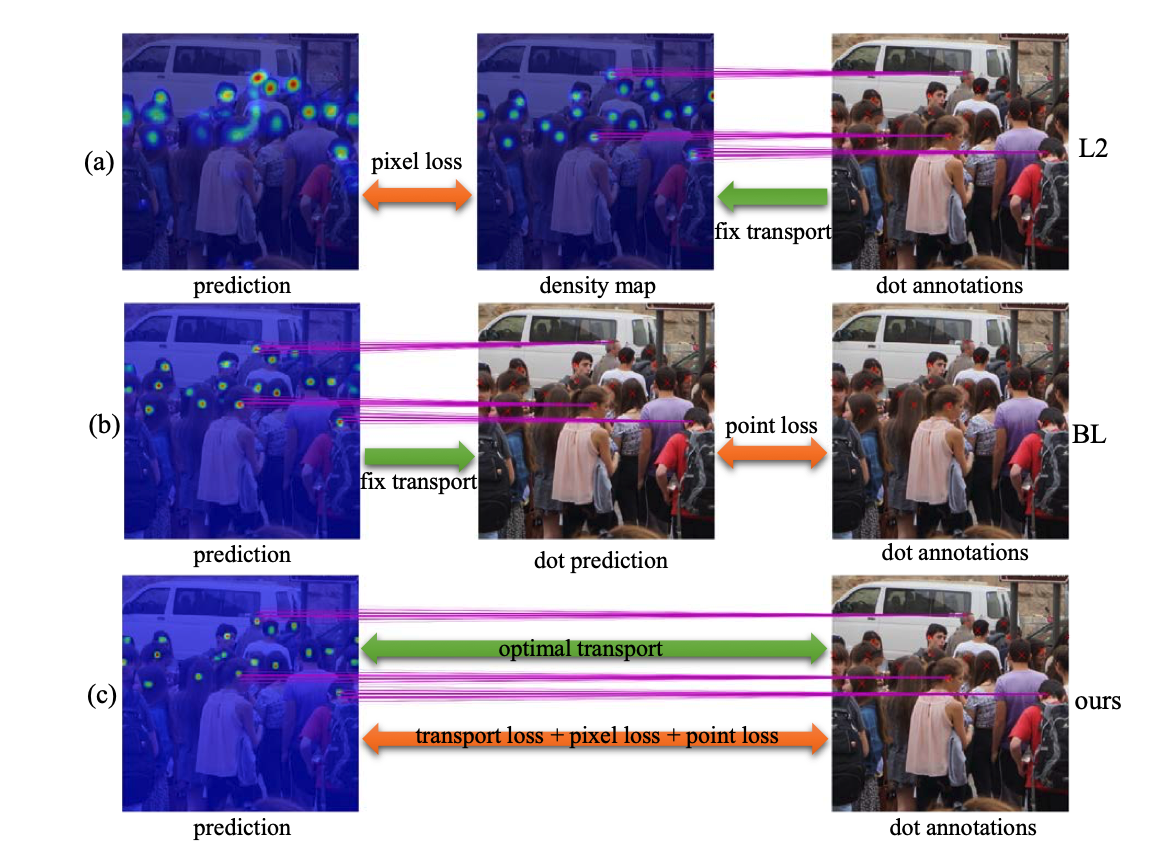

Crowd counting: Generalized loss function

Generalized loss function for crowd counting.

- Files: github

- Project page

- If you use this toolbox please cite:

A Generalized Loss Function for Crowd Counting and Localization. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2021.