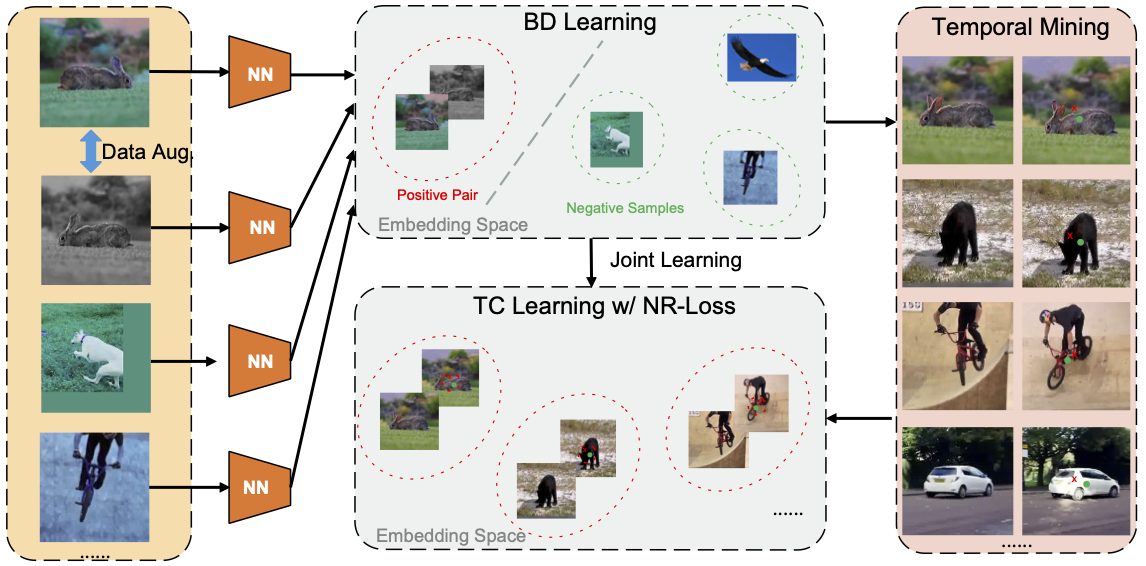

In this paper, we propose a progressive unsupervised learning (PUL) framework, which entirely removes the need for annotated training videos in visual tracking. Specifically, we first learn a background discrimination (BD) model that distinguishes an object from the background via contrastive learning. We then employ the BD model to progressively mine temporally corresponding patches (i.e., patches connected by a track) in sequential frames. Because the BD model is imperfect, the mined patch pairs are noisy, so we propose a noise-robust loss function to learn temporal correspondences more effectively from this noisy data. We use the proposed noise-robust loss to train the backbone networks of Siamese trackers. Without online fine-tuning or adaptation, our unsupervised real-time Siamese trackers outperform state-of-the-art unsupervised deep trackers and achieve results competitive with supervised baselines.
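To make the contrastive idea behind the BD model more concrete, here is a minimal sketch of a generic InfoNCE-style contrastive loss in NumPy. It is an illustration only and is not the loss used in the paper: the function name `info_nce_loss`, the `temperature` value, and the use of plain embedding vectors are all assumptions made for this example. The anchor and positive stand in for two views of the same object patch, and the negatives stand in for background patches.

```python
import numpy as np


def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """Generic InfoNCE loss for a single anchor embedding (illustrative only).

    anchor:    (D,) embedding of an object patch
    positive:  (D,) embedding of another view of the same object
    negatives: (K, D) embeddings of background patches
    """
    def l2_normalize(x):
        # Unit-normalize so that dot products are cosine similarities.
        return x / np.linalg.norm(x, axis=-1, keepdims=True)

    a = l2_normalize(np.asarray(anchor, dtype=float))
    p = l2_normalize(np.asarray(positive, dtype=float))
    n = l2_normalize(np.asarray(negatives, dtype=float))

    pos_logit = np.dot(a, p) / temperature      # scalar
    neg_logits = (n @ a) / temperature          # shape (K,)
    logits = np.concatenate([[pos_logit], neg_logits])

    # Numerically stable log-softmax; the positive sits at index 0.
    logits = logits - logits.max()
    log_prob_pos = logits[0] - np.log(np.exp(logits).sum())
    return -log_prob_pos


# Hypothetical 2-D embeddings: the object views agree, the background differs.
easy = info_nce_loss([1.0, 0.0], [1.0, 0.0], [[-1.0, 0.0], [0.0, 1.0]])
hard = info_nce_loss([1.0, 0.0], [-1.0, 0.0], [[1.0, 0.0]])
```

The loss is small when the anchor is closer to its positive than to every background embedding (`easy`) and large when a background embedding matches the anchor better than the positive does (`hard`).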

Selected Publications

- Progressive Unsupervised Learning for Visual Object Tracking.

In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2021 (oral). [supplemental]

Results