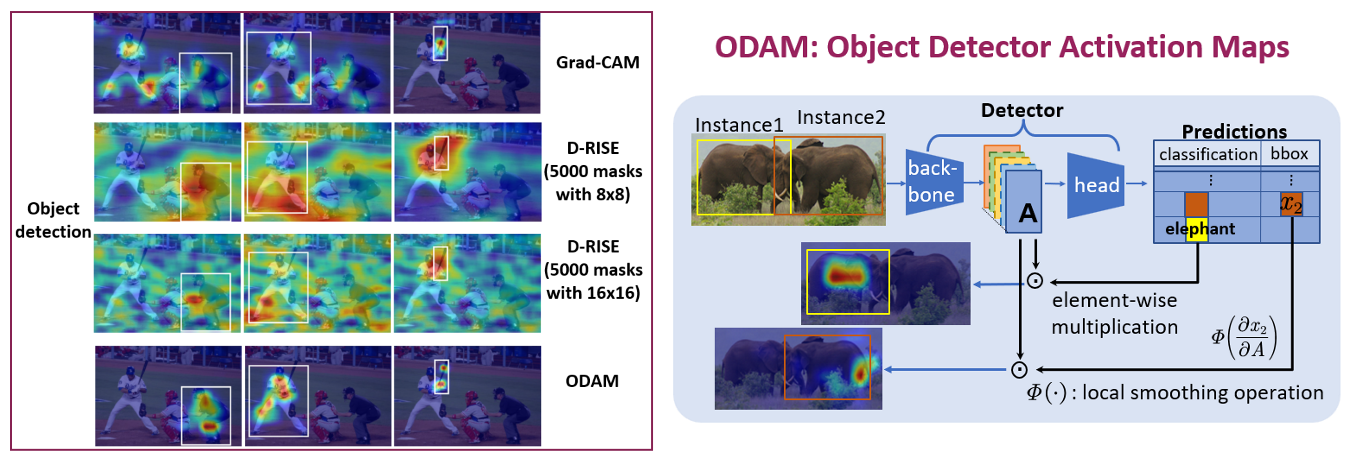

We propose gradient-weighted Object Detector Activation Maps (ODAM), a visual explanation technique for interpreting the predictions of object detectors. Using the gradients of detector targets flowing into the intermediate feature maps, ODAM produces heat maps that show how much each region influences the detector's decision for each predicted attribute. Unlike previous class activation map (CAM) methods, which produce class-specific explanations, ODAM generates instance-specific explanations. We show that ODAM applies to both one-stage and two-stage detectors with different types of backbones and heads, and that it produces higher-quality visual explanations than the state of the art while remaining efficient. We next propose a training scheme, Odam-Train, that improves the detector's explanations for object discrimination by encouraging consistent explanations for detections of the same object and distinct explanations for detections of different objects. Based on the heat maps produced by ODAM with Odam-Train, we propose Odam-NMS, which uses the model's explanation for each prediction to identify duplicate detections. We present a detailed analysis of the visual explanations of detectors and carry out extensive experiments to validate the effectiveness of ODAM.
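To make "gradient-weighted" concrete, here is a minimal sketch in plain Python. It assumes the feature maps and the gradients of one scalar detector output (a class score or a box coordinate) with respect to those maps have already been computed by autograd; a toy linear head stands in for the detector so that the gradient is simply the head's weight mask. The hypothetical `odam_map` keeps the gradient at every location (element-wise product with the features, sum over channels, ReLU), while the hypothetical `gradcam_map` shows a Grad-CAM-style baseline that averages each channel's gradient over space into a single weight. Any post-processing used in the paper (for example smoothing or normalization) is omitted.

```python
from typing import List

Tensor3 = List[List[List[float]]]  # [channels][height][width]
Map2 = List[List[float]]           # [height][width]


def odam_map(features: Tensor3, grads: Tensor3) -> Map2:
    """Instance-specific map: weight each activation by its own gradient,
    sum over channels, then clip negatives (ReLU)."""
    channels, height, width = len(features), len(features[0]), len(features[0][0])
    heat = [[0.0] * width for _ in range(height)]
    for c in range(channels):
        for i in range(height):
            for j in range(width):
                heat[i][j] += grads[c][i][j] * features[c][i][j]
    return [[max(v, 0.0) for v in row] for row in heat]


def gradcam_map(features: Tensor3, grads: Tensor3) -> Map2:
    """Grad-CAM-style baseline: average each channel's gradient over space
    into one weight, then take the ReLU of the weighted sum of feature maps."""
    channels, height, width = len(features), len(features[0]), len(features[0][0])
    weights = [sum(sum(row) for row in grads[c]) / (height * width) for c in range(channels)]
    return [
        [max(sum(weights[c] * features[c][i][j] for c in range(channels)), 0.0)
         for j in range(width)]
        for i in range(height)
    ]


# Toy example: one channel, a 1x4 feature map holding two objects side by side.
features = [[[1.0, 1.0, 1.0, 1.0]]]
# Hypothetical linear heads: detection 1 reads only the left object and
# detection 2 only the right one, so d(score)/d(features) is each head's mask.
grads_left = [[[1.0, 1.0, 0.0, 0.0]]]
grads_right = [[[0.0, 0.0, 1.0, 1.0]]]

if __name__ == "__main__":
    print(odam_map(features, grads_left))      # [[1.0, 1.0, 0.0, 0.0]]
    print(odam_map(features, grads_right))     # [[0.0, 0.0, 1.0, 1.0]]
    print(gradcam_map(features, grads_left))   # [[0.5, 0.5, 0.5, 0.5]]
    print(gradcam_map(features, grads_right))  # [[0.5, 0.5, 0.5, 0.5]]
```

Because the two detections share the same features and their gradients have the same spatial average, the pooled baseline gives them identical maps, whereas the element-wise weighting separates the two instances.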

We discuss two explanation tasks for object detection:

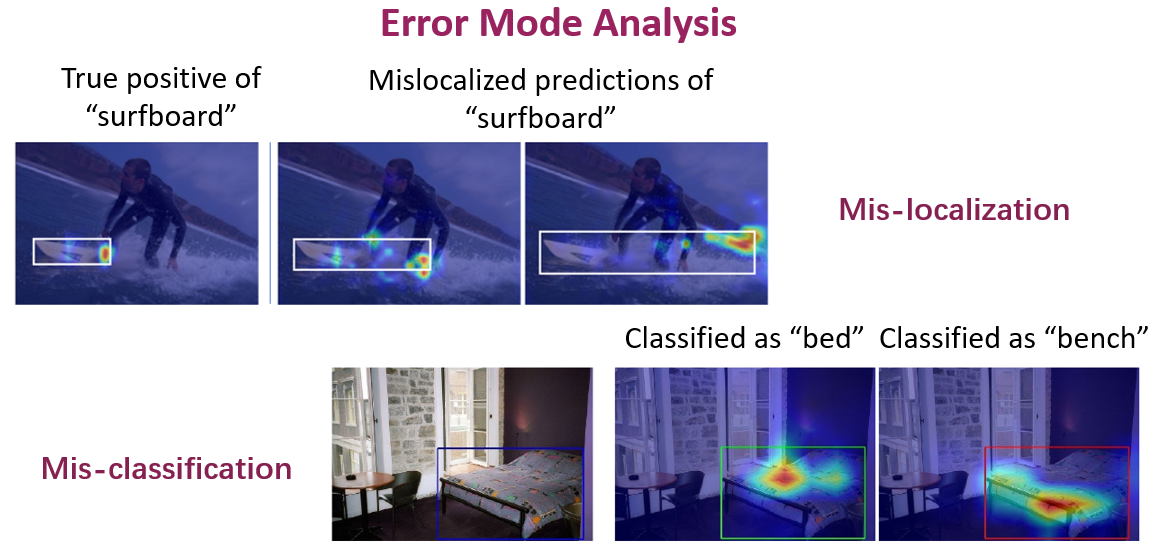

- Object Specification: the traditional explanation task, which aims to answer “What context/features are important for the prediction?” via a heat map that highlights the regions important for the final prediction. For example, ODAM can be used to analyze the error modes of the detector.

  - For high-confidence but poorly localized detections, we generate explanations of the wrongly predicted extents and compare them with the correct localization results. The heat maps for the mislocalized “surfboard” highlight the visual features that led to the wrong extents (the leg on the right and the sea horizon).

  - To analyze the model's classification decisions, we generate explanations of the class scores. In this example, the model correctly classifies an instance as “bed” when it sees the bed's cushion, but also mistakenly predicts “bench” based on the long metal frame at the foot of the bed.

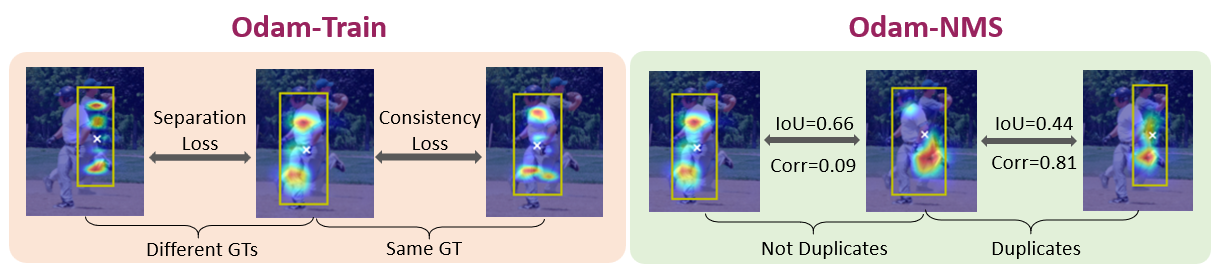

- Object Discrimination: the explanation task that answers “Which object was actually detected?”. This task is unique to object detection, where an image contains multiple classified objects. For discrimination, the visual explanation map should show which instance the model considered when making the prediction.

  - Odam-Train: we propose a training method that improves the object discrimination ability of the detector's visual explanations by encouraging the explanations of different objects to be separated and those of the same object to be consistent.

  - Odam-NMS: building on the object discrimination ability of ODAM, we propose Odam-NMS, which uses the instance-level heat maps generated by ODAM with Odam-Train to remove duplicates while preserving overlapping predictions during non-maximum suppression (NMS).
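The Odam-Train objective can be illustrated with a small contrastive-style loss. This is a hypothetical sketch, not the paper's exact loss: it assumes each detection comes with a flattened heat map and the index of the ground-truth object it was matched to, compares every pair of maps by cosine similarity, penalizes dissimilar maps for the same object, and penalizes similar maps for different objects. In real training the maps would be differentiable tensors so the loss could be back-propagated; here the function only computes its value, and the name `explanation_consistency_loss` is illustrative.

```python
import math
from itertools import combinations
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two flattened heat maps (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def explanation_consistency_loss(heatmaps: Sequence[Sequence[float]],
                                 object_ids: Sequence[int]) -> float:
    """Hypothetical Odam-Train-style objective over all pairs of detections:
    same-object pairs pay (1 - similarity), different-object pairs pay similarity."""
    same, diff = [], []
    for i, j in combinations(range(len(heatmaps)), 2):
        sim = cosine(heatmaps[i], heatmaps[j])
        if object_ids[i] == object_ids[j]:
            same.append(1.0 - sim)
        else:
            diff.append(sim)
    loss = 0.0
    if same:
        loss += sum(same) / len(same)
    if diff:
        loss += sum(diff) / len(diff)
    return loss


maps = [[1.0, 1.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]

if __name__ == "__main__":
    # Explanations agree with the object assignment: loss is close to zero.
    print(explanation_consistency_loss(maps, [0, 0, 1]))
    # Detections 1 and 2 share an object but have disjoint maps, while 0 and 1
    # are different objects with identical maps: loss is about 1.5.
    print(explanation_consistency_loss(maps, [0, 1, 1]))
```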
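Odam-NMS can likewise be sketched as a variant of greedy NMS. In this minimal sketch, whose decision rule is an assumption rather than the published one, a lower-scoring box is suppressed only if it overlaps an already kept box by more than `iou_thr` and its heat map is also similar to that box's map (cosine similarity above `sim_thr`); an overlapping box whose heat map points to a different instance survives. The thresholds and the function name `odam_style_nms` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two flattened heat maps (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def odam_style_nms(boxes: Sequence[Box], scores: Sequence[float],
                   heatmaps: Sequence[Sequence[float]],
                   iou_thr: float = 0.5, sim_thr: float = 0.8) -> List[int]:
    """Greedy NMS that suppresses a box only when it overlaps a kept box AND
    its explanation looks like the same instance (hypothetical rule)."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        is_duplicate = any(
            iou(boxes[i], boxes[k]) > iou_thr and cosine(heatmaps[i], heatmaps[k]) > sim_thr
            for k in keep
        )
        if not is_duplicate:
            keep.append(i)
    return keep


# Box 1 duplicates box 0 (same heat map); box 2 overlaps both but explains another object.
boxes = [(0.0, 0.0, 10.0, 10.0), (1.0, 0.0, 11.0, 10.0), (2.0, 0.0, 12.0, 10.0)]
scores = [0.9, 0.8, 0.7]
heatmaps = [[1.0, 1.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]

if __name__ == "__main__":
    print(odam_style_nms(boxes, scores, heatmaps))  # [0, 2]
```

With plain IoU-based NMS at the same threshold, box 2 would be suppressed because its IoU with box 0 is about 0.67; in this sketch its distinct heat map keeps it.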

Selected Publications

- ODAM: Gradient-based Instance-Specific Visual Explanations for Object Detection.

  In: Intl. Conf. on Learning Representations (ICLR), Rwanda, May 2023. [code]

- Gradient-based Instance-Specific Visual Explanations for Object Specification and Object Discrimination.

  IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), to appear 2024.

Results

- Evaluation Results of ODAM

- Code is available here.