One family of visual processes relevant to many computer vision applications is what could loosely be described as ensembles of particles subject to stochastic motion. The particles can be microscopic (e.g. plumes of smoke), macroscopic (e.g. leaves blowing in the wind), or even whole objects (e.g. the people in a crowd or the cars in a traffic jam). Applications range from remote monitoring for the prevention of natural disasters (e.g. forest fires), to background subtraction in challenging environments (e.g. outdoor scenes with trees moving in the background), to surveillance (e.g. traffic monitoring, crowd analysis and management). Traditional motion representations, such as optical flow, model the movement of individual particles, which may be at odds with how these visual processes are perceived. Recent efforts have instead moved toward holistic modeling, viewing video sequences of these processes as dynamic textures (Doretto et al., IJCV 2003) or, more precisely, as samples from a generative, stochastic texture model defined over space and time.
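In the dynamic texture of Doretto et al., a hidden state evolves linearly, x(t+1) = A x(t) + v(t), and each frame is observed as y(t) = C x(t) + ybar + w(t), with Gaussian noise v and w. The sketch below shows, in Python/NumPy, the SVD-based (suboptimal) learning procedure and the sampling of new frames. The function names, the flattened-frame input layout, and the isotropic observation noise (R = r I) are illustrative assumptions, not this project's code.

```python
import numpy as np


def learn_dynamic_texture(Y, n):
    """Fit a dynamic texture to video Y (pixels x frames) with n hidden states.

    SVD-based (suboptimal) learning in the style of Doretto et al. (2003);
    the observation noise is assumed isotropic, R = r * I.
    """
    ybar = Y.mean(axis=1, keepdims=True)            # mean frame
    Yc = Y - ybar                                   # centered frames
    U, s, Vt = np.linalg.svd(Yc, full_matrices=False)
    C = U[:, :n]                                    # observation matrix (principal components)
    X = s[:n, None] * Vt[:n, :]                     # state sequence, equal to C^T Yc
    # Least-squares state transition: X[:, 1:] ~= A X[:, :-1]
    A = X[:, 1:] @ np.linalg.pinv(X[:, :-1])
    V = X[:, 1:] - A @ X[:, :-1]                    # state-noise residuals
    Q = V @ V.T / V.shape[1]                        # state-noise covariance
    r = np.mean((Yc - C @ X) ** 2)                  # isotropic observation-noise variance
    return A, C, Q, r, ybar[:, 0], X[:, 0]


def synthesize(A, C, Q, r, ybar, x0, T, rng):
    """Sample T new frames from a learned dynamic texture."""
    n, m = A.shape[0], C.shape[0]
    w, E = np.linalg.eigh(Q)
    B = E * np.sqrt(np.clip(w, 0.0, None))          # B @ B.T == Q (up to round-off)
    x = x0.copy()
    frames = np.empty((m, T))
    for t in range(T):
        frames[:, t] = C @ x + ybar + np.sqrt(r) * rng.standard_normal(m)
        x = A @ x + B @ rng.standard_normal(n)
    return frames


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    Y = rng.standard_normal((64, 50))   # stand-in for 50 flattened 8x8 frames
    params = learn_dynamic_texture(Y, n=5)
    print(synthesize(*params, T=20, rng=rng).shape)  # (64, 20)
```

Because the states are the principal-component coefficients of the frames, C has orthonormal columns and the whole fit reduces to one SVD plus a least-squares solve.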

The goal of this project is to develop a family of motion models that extends and complements the original dynamic texture model. These new models can solve challenging computer vision problems, such as motion segmentation and motion classification, and can be applied to interesting real-world problems, such as crowd and traffic monitoring. These models can also be applied to computer audition problems (music information retrieval), such as semantic music annotation and music segmentation.

Models

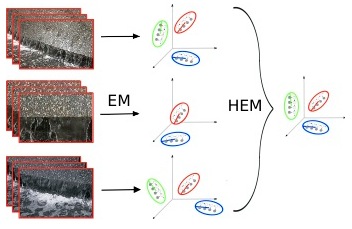

We propose a hierarchical EM (HEM) algorithm that clusters dynamic texture (DT) models and learns novel cluster centers that are representative of the cluster members. DT clustering can be applied to semantic motion annotation and to bag-of-systems codebook generation.

- , "Clustering Dynamic Textures with the Hierarchical EM Algorithm for Modeling Video." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 35(7):1606-1621, Jul 2013. [appendix]

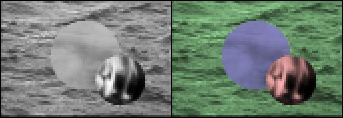

One disadvantage of the dynamic texture is its inability to account for multiple co-occurring textures in a single video. We extend the dynamic texture to a multi-state (layered) dynamic texture that can learn regions containing different dynamic textures.

- , "Layered dynamic textures." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 31(10):1862-1879, Oct 2009.

We introduce the mixture of dynamic textures, which models a collection of videos as samples from a set of dynamic textures. We use the model for video clustering and motion segmentation.

- , "Modeling, clustering, and segmenting video with mixtures of dynamic textures." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 30(5):909-926, May 2008.
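In the mixture of dynamic textures, a video is generated by first picking a component z with probability pi_z and then sampling from that component's dynamic texture. The NumPy sketch below shows only this generative process. The dictionary keys (A, C, Q, R, and mu, S for the initial-state mean and covariance) are hypothetical names, and fitting the mixture to data (typically by EM) is not shown.

```python
import numpy as np


def sample_dt_mixture(weights, components, T, rng):
    """Draw one video (observation dim x T) from a mixture of dynamic textures.

    weights: mixture probabilities pi_1..pi_K (summing to 1)
    components: list of dicts with hypothetical keys
        A (n x n), C (m x n), Q (n x n), R (m x m), mu (n,), S (n x n)
    Returns the sampled component index and the video.
    """
    z = rng.choice(len(weights), p=weights)          # 1) choose a component
    dt = components[z]
    n, m = dt["A"].shape[0], dt["C"].shape[0]
    x = rng.multivariate_normal(dt["mu"], dt["S"])   # 2) draw the initial state
    Y = np.empty((m, T))
    for t in range(T):                               # 3) run that component's linear system
        Y[:, t] = dt["C"] @ x + rng.multivariate_normal(np.zeros(m), dt["R"])
        x = dt["A"] @ x + rng.multivariate_normal(np.zeros(n), dt["Q"])
    return z, Y
```

Clustering and segmentation invert this process: each video (or video patch) is assigned to the component most likely to have generated it.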

We introduce the kernel dynamic texture, which has a non-linear observation function learned with kernel PCA. The new model captures more complex patterns of motion than the original dynamic texture, such as chaotic motion (e.g. boiling water and fire) and camera motion (e.g. panning and zooming).

- , "Classifying Video with Kernel Dynamic Textures." In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, Jun 2007.
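One way to read the kernel dynamic texture: the state sequence comes from kernel PCA of the frames instead of ordinary PCA, and the state dynamics are then fit by least squares as before. The NumPy sketch below assumes an RBF kernel (the kernel choice and its gamma parameter are assumptions) and omits the non-linear mapping from states back to image space. With a linear kernel, the kernel-PCA states reduce to the PCA states of the ordinary dynamic texture, up to the sign of each component.

```python
import numpy as np


def rbf_gram(Y, gamma):
    """Gram matrix K[i, j] = exp(-gamma * ||y_i - y_j||^2) between frames (columns of Y)."""
    sq = np.sum(Y ** 2, axis=0)
    d2 = sq[:, None] + sq[None, :] - 2.0 * Y.T @ Y
    return np.exp(-gamma * np.maximum(d2, 0.0))   # clip tiny negative round-off


def kpca_states(K, n):
    """Project the training frames onto the top-n kernel principal components."""
    tau = K.shape[0]
    H = np.eye(tau) - np.full((tau, tau), 1.0 / tau)   # centering matrix
    Kc = H @ K @ H                                       # centered Gram matrix
    lam, V = np.linalg.eigh(Kc)                          # ascending eigenvalues
    order = np.argsort(lam)[::-1][:n]
    lam, V = np.clip(lam[order], 0.0, None), V[:, order]
    return np.sqrt(lam)[:, None] * V.T                   # n x tau state sequence


def fit_dynamics(X):
    """Least-squares transition matrix A and state-noise covariance Q."""
    A = X[:, 1:] @ np.linalg.pinv(X[:, :-1])
    V = X[:, 1:] - A @ X[:, :-1]
    return A, V @ V.T / V.shape[1]
```

For example, `fit_dynamics(kpca_states(rbf_gram(Y, 0.01), n=4))` learns the state dynamics of a video Y under the assumed RBF kernel.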

Computer Vision Applications

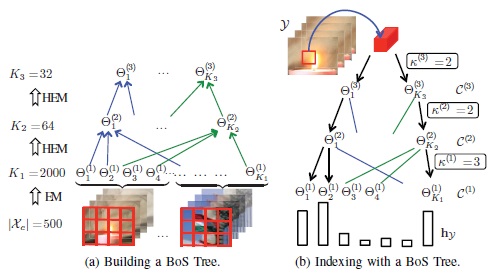

We propose the BoSTree, which uses a tree structure to map videos efficiently to a bag-of-systems (BoS) codebook, making larger and richer codebooks practical.

- , "A Scalable and Accurate Descriptor for Dynamic Textures using Bag of System Trees." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 37(4):697-712, Apr 2015. [appendix]
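The speed-up comes from replacing a linear scan over all codewords with a descent through a tree whose internal nodes summarize their children (for example, cluster centers such as those learned by HEM). The Python sketch below is a hypothetical illustration of that lookup and of building a bag-of-systems histogram. The `Node` class, the `score` function (standing in for a similarity between a video's local dynamic texture and a codeword, such as a likelihood), and the greedy single-path descent are all assumptions, not the BoSTree implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

import numpy as np


@dataclass
class Node:
    center: object                     # representative model for this subtree
    children: List["Node"] = field(default_factory=list)
    codeword: Optional[int] = None     # codeword index, set only on leaves


def lookup(root: Node, item, score: Callable[[object, object], float]) -> int:
    """Greedy descent: at each level follow the best-scoring child."""
    node = root
    while node.children:
        node = max(node.children, key=lambda c: score(item, c.center))
    return node.codeword


def bos_histogram(items, root, num_codewords, score):
    """Normalized bag-of-systems histogram of codeword assignments."""
    hist = np.zeros(num_codewords)
    for item in items:
        hist[lookup(root, item, score)] += 1
    return hist / max(len(items), 1)
```

With branching factor b and K codewords, a balanced tree needs roughly b log_b(K) score evaluations per item instead of K, which is what makes larger codebooks affordable.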

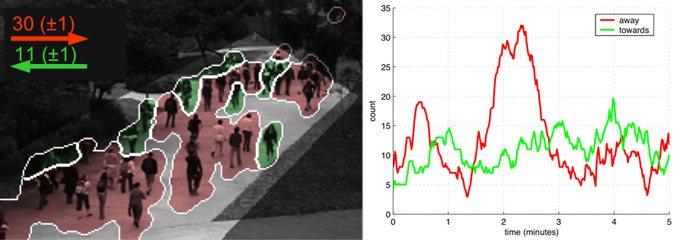

We estimate the size of moving crowds in a privacy-preserving manner, i.e. without people models or tracking. The system first segments the crowd by its motion, extracts low-level features from each segment, and estimates the crowd count in each segment using Gaussian process regression.

- , "Counting People with Low-Level Features and Bayesian Regression." IEEE Trans. on Image Processing (TIP), 21(4):2170-2177, May 2012.
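The final step maps each segment's feature vector to a count. A minimal NumPy sketch of Gaussian process regression follows, using a squared-exponential (RBF) kernel and a constant prior mean equal to the training average. The kernel, its hyperparameters, and the idea that features might be, for example, segment area or edge length are assumptions; in practice the hyperparameters would be learned, for instance by maximizing the marginal likelihood.

```python
import numpy as np


def sq_exp_kernel(X1, X2, length_scale, variance):
    """k(x, x') = variance * exp(-||x - x'||^2 / (2 * length_scale^2))."""
    d2 = np.sum((X1[:, None, :] - X2[None, :, :]) ** 2, axis=-1)
    return variance * np.exp(-0.5 * d2 / length_scale ** 2)


def gp_count(X_train, y_train, X_test, length_scale=1.0, variance=1.0, noise=0.1):
    """Posterior mean and variance of the count at each test feature vector."""
    y_mean = y_train.mean()                           # constant prior mean
    K = sq_exp_kernel(X_train, X_train, length_scale, variance)
    L = np.linalg.cholesky(K + noise * np.eye(len(X_train)))
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_mean))
    Ks = sq_exp_kernel(X_test, X_train, length_scale, variance)
    mean = y_mean + Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = variance - np.sum(v ** 2, axis=0)           # variance of the latent function
    return mean, var
```

The predicted mean can be rounded to obtain an integer count, and the posterior variance indicates how far a test segment lies from the training data.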

We propose a background model for dynamic scenes that generalizes the Stauffer-Grimson background model by making each mixture component a dynamic texture. We derive an online algorithm that updates the model parameters using a set of sufficient statistics.

- , "Generalized Stauffer-Grimson background subtraction for dynamic scenes." Machine Vision and Applications, 22(5):751-766, Sep 2011.
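A sketch of how such online learning can work, assuming the SVD-style estimates used for a single dynamic texture: if states are obtained as x = C^T (y - ybar), then C is spanned by the top eigenvectors of the frame covariance, and A = (C^T Psi C)(C^T Phi C)^-1, where Phi and Psi are the covariance and lag-one cross-covariance of the centered frames. Replacing these expectations with exponentially weighted running averages (learning rate alpha, as in Stauffer-Grimson) gives an update that never stores past frames. The class and attribute names are hypothetical, and this single-component version omits the mixture bookkeeping and the foreground test of the full method.

```python
import numpy as np


class OnlineDTStatistics:
    """Exponentially weighted sufficient statistics for one dynamic texture."""

    def __init__(self, dim, alpha):
        self.alpha = alpha                    # learning rate
        self.mean = np.zeros(dim)             # running mean frame
        self.Phi = np.zeros((dim, dim))       # E[y_{t-1} y_{t-1}^T] (centered)
        self.Psi = np.zeros((dim, dim))       # E[y_t y_{t-1}^T] (centered)
        self.prev = None                      # previous centered frame

    def update(self, y):
        a = self.alpha
        self.mean = (1 - a) * self.mean + a * y
        yc = y - self.mean
        if self.prev is not None:
            self.Phi = (1 - a) * self.Phi + a * np.outer(self.prev, self.prev)
            self.Psi = (1 - a) * self.Psi + a * np.outer(yc, self.prev)
        self.prev = yc

    def parameters(self, n):
        """Observation matrix C (top-n eigenvectors of Phi) and transition matrix A."""
        w, E = np.linalg.eigh(self.Phi)
        C = E[:, np.argsort(w)[::-1][:n]]
        A = (C.T @ self.Psi @ C) @ np.linalg.inv(C.T @ self.Phi @ C)
        return C, A
```

A larger alpha adapts faster to changes in the background but forgets older frames more quickly, the same trade-off as in the original Stauffer-Grimson model.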

We introduce the mixture of dynamic textures, which models a collection of videos as samples from a set of dynamic textures. We use the model for video clustering and motion segmentation.

- , "Modeling, clustering, and segmenting video with mixtures of dynamic textures." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 30(5):909-926, May 2008.

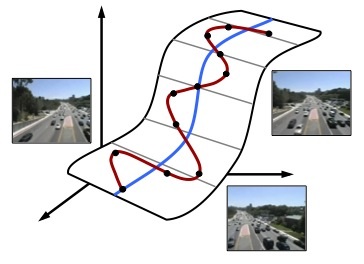

We classify traffic congestion in video by representing each video as a dynamic texture and classifying it with an SVM that uses a probabilistic kernel based on the Kullback-Leibler divergence (the KL kernel). The resulting classifier is robust to noise and lighting changes.

- , "Probabilistic Kernels for the Classification of Auto-regressive Visual Processes." In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, Jun 2005. [8-page version]
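One common form of such a probabilistic kernel turns a divergence between models into a similarity, K(p, q) = exp(-gamma * (KL(p||q) + KL(q||p))). Computing the KL divergence between two dynamic textures takes more machinery, so the NumPy sketch below substitutes multivariate Gaussians, whose KL divergence has a closed form; both gamma and the Gaussian stand-in are assumptions made for illustration. Note that a kernel matrix built this way is not guaranteed to be positive semi-definite.

```python
import numpy as np


def kl_gaussian(mu0, S0, mu1, S1):
    """KL( N(mu0, S0) || N(mu1, S1) ) in closed form."""
    k = mu0.shape[0]
    S1_inv = np.linalg.inv(S1)
    d = mu1 - mu0
    _, logdet0 = np.linalg.slogdet(S0)
    _, logdet1 = np.linalg.slogdet(S1)
    return 0.5 * (np.trace(S1_inv @ S0) + d @ S1_inv @ d - k + logdet1 - logdet0)


def kl_kernel(models, gamma):
    """Gram matrix K[i, j] = exp(-gamma * (KL(p_i||p_j) + KL(p_j||p_i)))."""
    N = len(models)
    D = np.array([[kl_gaussian(*models[i], *models[j]) for j in range(N)]
                  for i in range(N)])
    return np.exp(-gamma * (D + D.T))
```

The resulting matrix can be passed to any SVM that accepts precomputed kernels, such as scikit-learn's `SVC(kernel="precomputed")`.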

Computer Audition Applications

Dynamic textures can also model music signals, treated as time series of audio feature vectors.

We propose an approach to automatic music annotation and retrieval that is based on the dynamic texture mixture, a generative time series model of musical content. The new annotation model better captures temporal (e.g., rhythmical) aspects as well as timbral content.

- , "Time Series Models for Semantic Music Annotation." IEEE Trans. on Audio, Speech and Language Processing (TASLP), 19(5):1343-1359, Jul 2011.

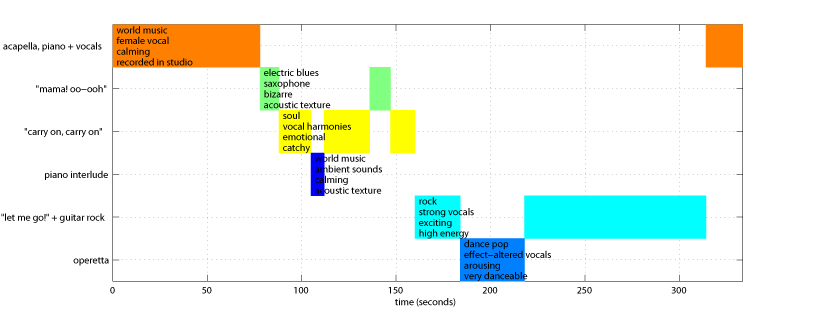

We model a time-series of audio feature vectors, extracted from a short audio fragment, as a dynamic texture. The musical structure of a song (e.g. chorus, verse, and bridge) is discovered by segmenting the song using the mixture of dynamic textures. The song segmentations are used for song retrieval, song annotation, and database visualization.

- , "Modeling music as a dynamic texture." IEEE Trans. on Audio, Speech and Language Processing (TASLP), 18(3):602-612, Mar 2010.
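A minimal NumPy sketch of the first step: slide a window over a song's sequence of audio feature vectors and fit a dynamic texture to each fragment, using the same SVD-based estimates as for video. The window length, hop size, state dimension, and feature type (for example MFCCs) are assumptions; the subsequent segmentation with a mixture of dynamic textures is not shown.

```python
import numpy as np


def fragments(F, length, hop):
    """Overlapping windows of a (feature_dim x num_frames) feature sequence."""
    return [F[:, s:s + length] for s in range(0, F.shape[1] - length + 1, hop)]


def fit_dt(Y, n):
    """SVD-based estimates of the observation matrix C and transition matrix A."""
    Yc = Y - Y.mean(axis=1, keepdims=True)
    U, s, Vt = np.linalg.svd(Yc, full_matrices=False)
    C, X = U[:, :n], s[:n, None] * Vt[:n]
    A = X[:, 1:] @ np.linalg.pinv(X[:, :-1])
    return C, A


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    F = rng.standard_normal((13, 100))     # stand-in for 100 frames of 13-dim features
    models = [fit_dt(Y, n=4) for Y in fragments(F, length=20, hop=10)]
    print(len(models))                     # 9 fragments
```

Overlapping windows let neighboring fragments share frames, so the fitted models change smoothly along the song and boundaries between sections show up as changes in the dynamics.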

Selected Publications

- , "A Scalable and Accurate Descriptor for Dynamic Textures using Bag of System Trees." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 37(4):697-712, Apr 2015. [appendix]
- , "Clustering Dynamic Textures with the Hierarchical EM Algorithm for Modeling Video." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 35(7):1606-1621, Jul 2013. [appendix]
- , "A Bag of Systems Representation for Music Auto-tagging." IEEE Trans. on Audio, Speech and Language Processing (TASLP), 21(12):2554-2569, Dec 2013.
- , "Growing a Bag of Systems Tree for Fast and Accurate Classification." In: IEEE Conf. Computer Vision and Pattern Recognition (CVPR), Providence, Jun 2012.
- , "Generalized Stauffer-Grimson background subtraction for dynamic scenes." Machine Vision and Applications, 22(5):751-766, Sep 2011.
- , "Time Series Models for Semantic Music Annotation." IEEE Trans. on Audio, Speech and Language Processing (TASLP), 19(5):1343-1359, Jul 2011.
- , "Automatic music tagging with time series models." In: International Society for Music Information Retrieval Conference (ISMIR), Utrecht, Aug 2010.
- , "Modeling music as a dynamic texture." IEEE Trans. on Audio, Speech and Language Processing (TASLP), 18(3):602-612, Mar 2010.
- , "Clustering Dynamic Textures with the Hierarchical EM Algorithm." In: IEEE Conf. Computer Vision and Pattern Recognition (CVPR), San Francisco, Jun 2010. [supplemental]
- , "Analysis of Crowded Scenes using Holistic Properties." In: 11th IEEE Intl. Workshop on Performance Evaluation of Tracking and Surveillance (PETS 2009), Miami, Jun 2009.
- , "Layered dynamic textures." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 31(10):1862-1879, Oct 2009.
- , "Variational Layered Dynamic Textures." In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, Jun 2009.
- , "Derivations for the Layered Dynamic Texture and Temporally-Switching Layered Dynamic Texture." Technical Report SVCL-TR-2009-01, Jun 2009.
- , "Privacy Preserving Crowd Monitoring: Counting People without People Models or Tracking." In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, Jun 2008.
- , "Modeling, clustering, and segmenting video with mixtures of dynamic textures." IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), 30(5):909-926, May 2008.
- , "Classifying Video with Kernel Dynamic Textures." In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, Jun 2007.
- , "Layered Dynamic Textures." In: Neural Information Processing Systems 18 (NIPS), Vancouver, Dec 2005.
- , "Mixtures of Dynamic Textures." In: IEEE International Conference on Computer Vision (ICCV), Beijing, Oct 2005.
- , "Probabilistic Kernels for the Classification of Auto-regressive Visual Processes." In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, Jun 2005. [8-page version]
- , "Classification and Retrieval of Traffic Video using Auto-regressive Stochastic Processes." In: 2005 IEEE Intelligent Vehicles Symposium (IEEEIV), Las Vegas, Jun 2005.
- , "Efficient Computation of the KL Divergence between Dynamic Textures." Technical Report SVCL-TR-2004-02, Nov 2004. [a more efficient algorithm is discussed in my thesis]

Links

Here are links to more resources on Dynamic Textures: