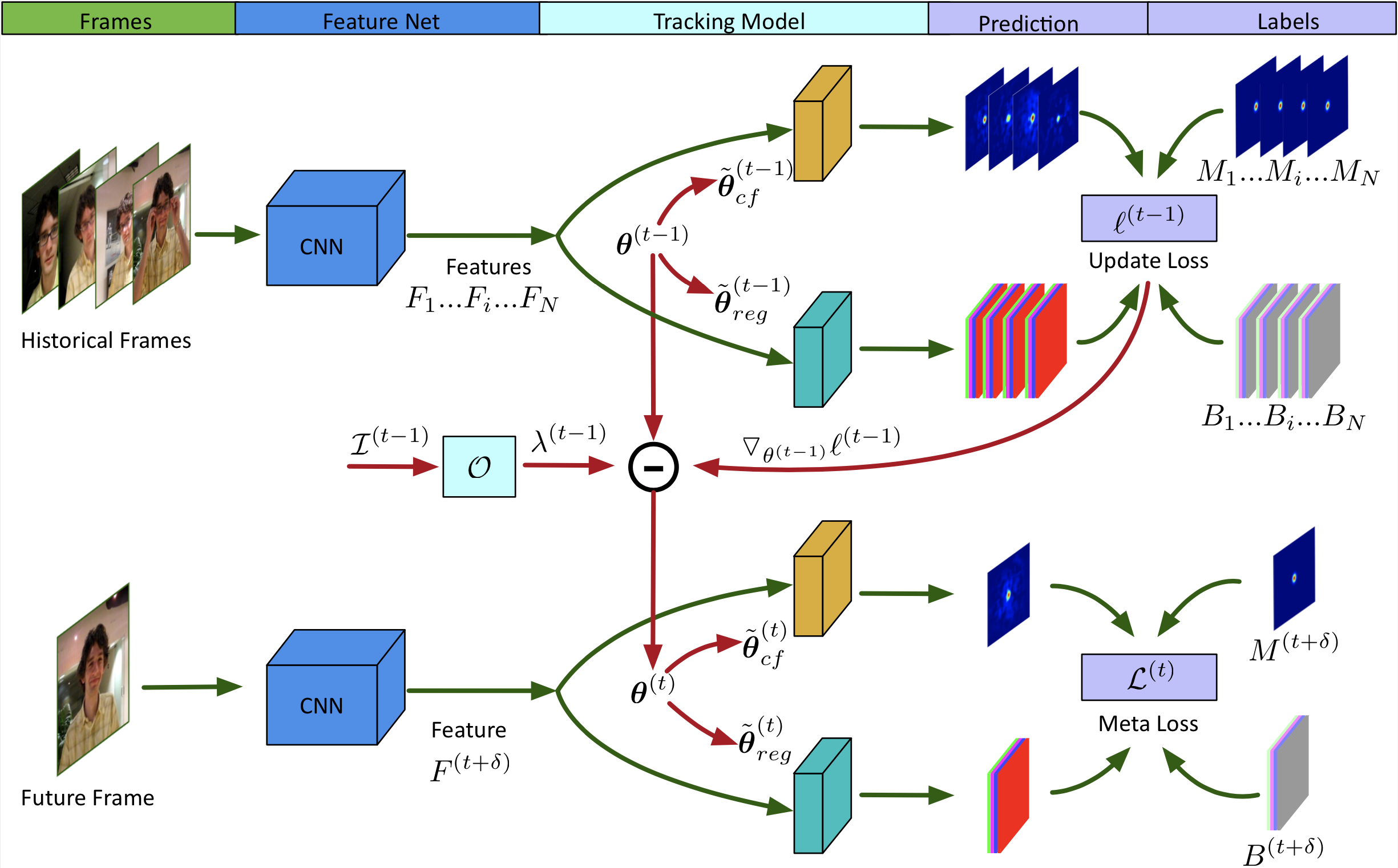

We propose a tracking framework composed of two modules: response generation and bounding box regression. The first module produces a response map that indicates how likely each anchor box, placed at a sliding-window position, is to cover the object. The second module predicts bounding box offsets from the anchors to obtain refined rectangles. Instead of enumerating anchors with different aspect ratios as in SiamRPN, we use a single anchor size at each position and adapt it to shape changes by resizing the corresponding convolutional filter with bilinear interpolation, which saves model parameters and computation time.

To adapt the tracking model effectively to appearance changes during tracking, we propose a recurrent model optimization method that learns a more effective form of gradient descent: it converges the model update in 1-2 steps and generalizes better to future frames. The key idea is to train a neural optimizer that can converge the tracking model to a good solution in a few gradient steps. During training, the tracking model is first updated using the neural optimizer and then applied to future frames to obtain an error signal, which is minimized. In this setting, the resulting optimizer converges the tracking classifier significantly faster than SGD-based optimizers, especially when learning the initial tracking model. In summary, our contributions are:

- We propose a tracking model consisting of a resizable response generator and a bounding box regressor, where only a single anchor size is used at each spatial position and its corresponding convolutional filter is adapted to shape variations by bilinear interpolation.

- We propose a recurrent neural optimizer, trained in a meta-learning setting, that recurrently updates the tracking model with faster convergence.

- We conduct comprehensive experiments on large-scale datasets, including OTB, VOT, LaSOT, GOT10k and TrackingNet, and our trackers achieve favorable performance compared with state-of-the-art methods.
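The resizable filter idea can be illustrated with a small sketch. It assumes a single-channel 2D filter, corner-aligned bilinear sampling, and plain valid-mode cross-correlation to turn a feature map into a response map. The real model operates on multi-channel convolutional features, and the function names `bilinear_resize` and `correlate`, as well as the filter and feature values, are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def bilinear_resize(kernel: Matrix, out_h: int, out_w: int) -> Matrix:
    """Resize a 2D filter with bilinear interpolation (corner-aligned sampling, an assumption)."""
    in_h, in_w = len(kernel), len(kernel[0])
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        # Map output row i to a fractional input row; first/last rows map to first/last rows.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # Interpolate horizontally on the two neighbouring rows, then vertically.
            top = (1 - dx) * kernel[y0][x0] + dx * kernel[y0][x1]
            bottom = (1 - dx) * kernel[y1][x0] + dx * kernel[y1][x1]
            out[i][j] = (1 - dy) * top + dy * bottom
    return out


def correlate(feature: Matrix, kernel: Matrix) -> Matrix:
    """Valid-mode 2D cross-correlation: one response value per sliding-window position."""
    fh, fw = len(feature), len(feature[0])
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(feature[r + a][c + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for c in range(fw - kw + 1)
        ]
        for r in range(fh - kh + 1)
    ]


# Usage: stretch one hypothetical base filter to a wider shape instead of storing a second filter.
base = [[0.0, 1.0], [2.0, 3.0]]
wide = bilinear_resize(base, 2, 4)
feature = [[float((r * 7 + c * 3) % 5) for c in range(6)] for r in range(5)]
response = correlate(feature, wide)
peak_value, peak_row, peak_col = max(
    (v, r, c) for r, row in enumerate(response) for c, v in enumerate(row)
)
```

Under these assumptions, a single base filter that is resized on demand takes the place of a separate set of filters per anchor aspect ratio, which is where the savings in parameters come from. The location of the maximum response marks the most likely sliding-window position of the target.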
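The meta-learning setup described above (update the model on the current frame, then measure and minimize its error on a future frame) can be sketched in a deliberately simplified form. This is not ROAM's recurrent neural optimizer. The learned "optimizer" here is a single step size `alpha`, the tracking model is a scalar linear predictor, the future frame is simulated by a small drift in the target, and the meta-gradient is computed by a central finite difference instead of backpropagating through the unrolled updates. All names and data are hypothetical.

```python
import math
from typing import List, Tuple

Frame = List[Tuple[float, float]]  # (input x, target y) pairs observed in one frame


def loss(w: float, frame: Frame) -> float:
    """Mean squared error of the toy linear 'tracking model' y ~ w * x."""
    return sum((w * x - y) ** 2 for x, y in frame) / len(frame)


def grad(w: float, frame: Frame) -> float:
    """Analytic gradient of `loss` with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in frame) / len(frame)


def unrolled_update(w0: float, frame: Frame, alpha: float, steps: int = 2) -> float:
    """Inner loop: a few gradient steps on the current frame with step size alpha."""
    w = w0
    for _ in range(steps):
        w -= alpha * grad(w, frame)
    return w


def meta_loss(log_alpha: float, tasks: List[Tuple[Frame, Frame]]) -> float:
    """Update on each current frame, then score the updated model on its future frame."""
    alpha = math.exp(log_alpha)  # optimize log(alpha) so the step size stays positive
    return sum(loss(unrolled_update(0.0, cur, alpha), fut) for cur, fut in tasks) / len(tasks)


def meta_train(tasks: List[Tuple[Frame, Frame]], log_alpha: float,
               lr: float = 0.1, iters: int = 300, eps: float = 1e-4) -> float:
    """Outer loop: gradient descent on the meta-loss w.r.t. log(alpha) via central differences."""
    for _ in range(iters):
        g = (meta_loss(log_alpha + eps, tasks) - meta_loss(log_alpha - eps, tasks)) / (2 * eps)
        log_alpha -= lr * g
    return log_alpha


def make_task(slope: float, drift: float = 0.1) -> Tuple[Frame, Frame]:
    """Hypothetical task: fit the current frame, then face a slightly drifted future frame."""
    xs = [0.5, 1.0, 1.5]
    current = [(x, slope * x) for x in xs]
    future = [(x, (slope + drift) * x) for x in xs]
    return current, future


tasks = [make_task(s) for s in (1.0, 2.0, 3.0)]
init = math.log(0.01)  # a small, SGD-like starting step size
learned = meta_train(tasks, init)
print(f"alpha: {math.exp(init):.3f} -> {math.exp(learned):.3f}")
print(f"future-frame loss after 2 steps: {meta_loss(init, tasks):.4f} -> {meta_loss(learned, tasks):.4f}")
```

With the starting step size, two gradient steps barely move the model. After meta-training, the learned step size brings the model close to the target within the same two steps. In this toy quadratic problem, a single step suffices once `alpha` reaches 1 / (2 * mean(x^2)) = 3/7 for the inputs used here, so the only remaining error comes from the simulated drift.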

Publications

- ROAM: Recurrently Optimizing Tracking Model. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, Jun 2020. [code]