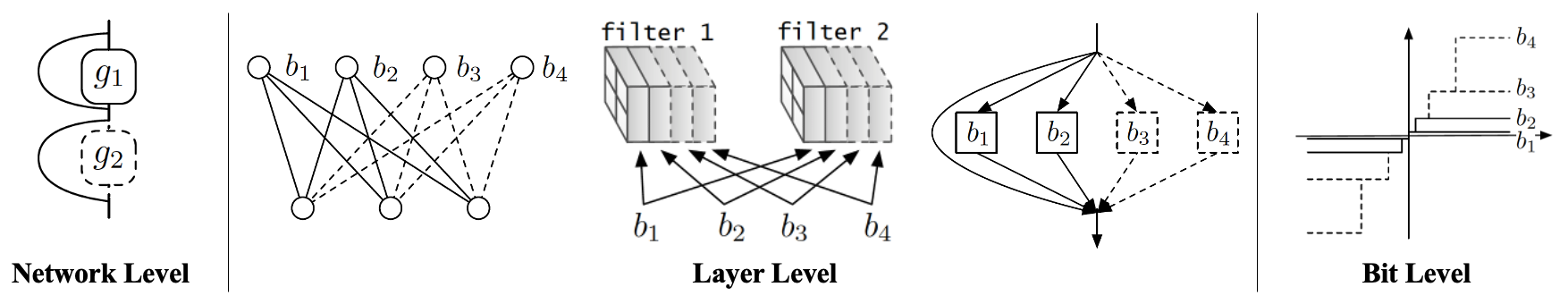

Neural network compression and quantization are important tasks for fitting state-of-the-art models into the computational, memory, and power constraints of mobile devices and embedded hardware. Recent approaches to model compression/quantization use reinforcement learning or search methods to compress/quantize the neural network for a specific hardware platform. However, these methods require multiple runs to compress/quantize the same base neural network for different hardware setups. In this work, we propose a fully nested neural network (FN3) that requires only a single run to build a nested set of compressed/quantized models, which are optimal for different resource constraints. Specifically, we exploit the additive characteristic of building blocks at different levels of a neural network and propose an ordered dropout (ODO) operation that ranks the building blocks by importance during training. Given a trained FN3, a fast heuristic search algorithm is run offline to find the optimal removal of components to maximize the accuracy under different constraints. Compared with related work on adaptive neural networks designed only for channels or bits, the proposed approach is unified across different levels of building blocks (network-level residual blocks; layer-level neurons, channels, and parallel residual paths; and bit-level quantization). Empirical results validate the strong practical performance of the proposed approach.
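To make the idea of ordered dropout concrete, here is a minimal NumPy sketch rather than the FN3 implementation. It assumes a layer whose units are indexed in order and draws the cut-off index from a geometric distribution, as in generic nested dropout. The function names `ordered_dropout_mask`, `apply_ordered_dropout`, and `truncate_layer`, and the parameter `stop_prob`, are hypothetical and chosen only for illustration.

```python
import numpy as np


def ordered_dropout_mask(num_units, stop_prob, rng):
    """Sample a nested mask that keeps the first b units and drops the rest.

    Assumption: b follows a geometric distribution with success probability
    `stop_prob`, truncated to num_units. The actual ODO schedule may differ.
    """
    b = min(int(rng.geometric(stop_prob)), num_units)
    mask = np.zeros(num_units)
    mask[:b] = 1.0
    return mask


def apply_ordered_dropout(activations, stop_prob, rng):
    """Zero out a trailing block of units along the last axis."""
    mask = ordered_dropout_mask(activations.shape[-1], stop_prob, rng)
    return activations * mask


def truncate_layer(weights, k):
    """Extract a sub-layer by keeping only the first k output units.

    weights has shape (in_features, out_features).
    """
    return weights[:, :k]


rng = np.random.default_rng(0)
x = np.ones((2, 8))                      # batch of 2, layer with 8 units
y = apply_ordered_dropout(x, 0.3, rng)   # trailing units are zeroed
w_small = truncate_layer(np.ones((4, 8)), 3)
```

Because low-index units are dropped less often than high-index ones, training under such a mask pushes the most important information into the earliest units. A smaller model can then be obtained by truncation, which is the nesting property described above.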

One disadvantage of FN3 is that the dropout rate for ODO is fixed as a hyperparameter across layers throughout the training process. Therefore, when nodes are removed, the performance decays along a human-specified trajectory rather than a trajectory learned from data. Another drawback is that the generated sub-networks are deterministic networks without well-calibrated uncertainty. To address these two problems, we develop a Bayesian approach to nested neural networks. We propose a variational ordering unit that draws samples for nested dropout at a low cost from a proposed Downhill distribution, which provides useful gradients to the parameters of nested dropout. Based on this approach, we design a Bayesian nested neural network that learns the order knowledge of the node distributions. In experiments, we show that the proposed approach outperforms the nested network in terms of accuracy, calibration, and out-of-domain detection in classification tasks. It also outperforms the related approach on uncertainty-critical tasks in computer vision.

Selected Publications

In: International Joint Conf. on Artificial Intelligence (IJCAI), Yokohama, July 2020. [supplemental]

In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2021.