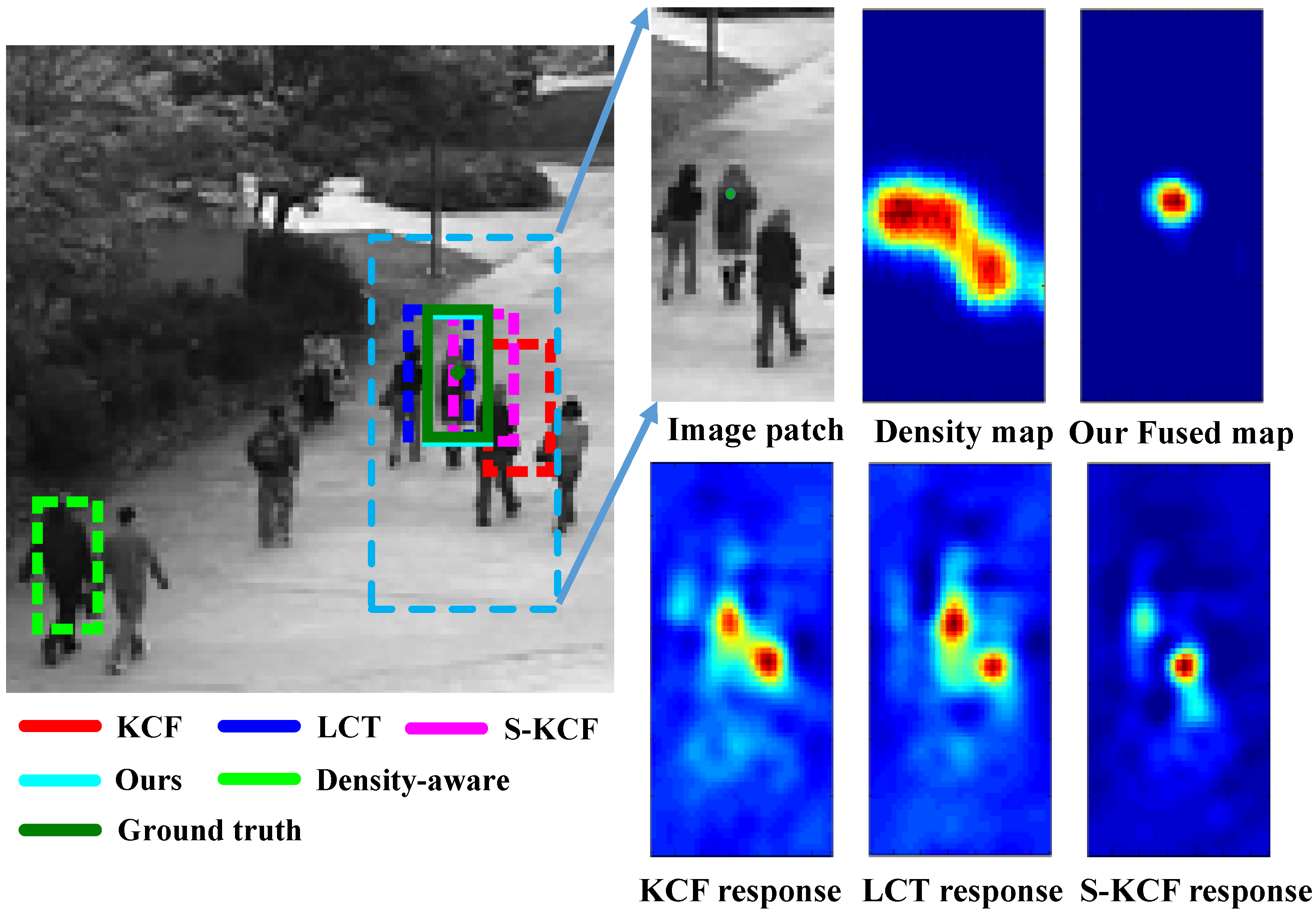

While visual tracking has improved greatly in recent years, people tracking in crowd scenes remains particularly challenging due to heavy occlusions, high crowd density, and significant appearance variation. To address these challenges, we first design a Sparse Kernelized Correlation Filter (S-KCF) that suppresses target response variations caused by occlusions and illumination changes, as well as spurious responses caused by similar-looking distractor objects. We then propose a people tracking framework that uses a convolutional neural network (CNN) to fuse the S-KCF response map with an estimated crowd density map, yielding a refined response map. Our density fusion framework can significantly improve people tracking in crowd scenes, and it can also be combined with other trackers to improve their tracking performance.

The figure shows an example of people tracking in a crowd scene using different trackers: KCF, S-KCF (ours), the long-term correlation tracker (LCT), a density-aware tracker, and our proposed fusion tracker. The response maps of the raw trackers (bottom-right) are strongly affected by the objects surrounding the target because of their similar appearance. Compared to KCF and LCT, our S-KCF partially suppresses these spurious responses using a sparsity constraint. Our density fusion framework combines the S-KCF response map with the crowd density map, effectively suppressing irrelevant responses and localizing the target accurately (top-right).
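To make the two stages concrete (a correlation-filter response map, then fusion with a density map), here is a minimal NumPy sketch under stated assumptions. It implements the standard single-channel KCF with a Gaussian kernel, not the sparse S-KCF: the sparsity constraint is not reproduced. It also replaces the paper's fusion CNN with a hypothetical hand-crafted weighting (`fuse_naive`). All function names and parameter values (`sigma`, `lam`, `alpha`) are illustrative assumptions, and real trackers additionally use feature channels (e.g., HOG) and a cosine window.

```python
import numpy as np

def gaussian_correlation(a, b, sigma):
    """Gaussian kernel evaluated between a and every cyclic shift of b (spatial map)."""
    cross = np.real(np.fft.ifft2(np.fft.fft2(a) * np.conj(np.fft.fft2(b))))
    d = (np.sum(a ** 2) + np.sum(b ** 2) - 2.0 * cross) / a.size
    return np.exp(-np.maximum(d, 0.0) / sigma ** 2)

def gaussian_label(shape, sigma_y=2.0):
    """Desired regression target: a Gaussian whose peak sits at shift (0, 0)."""
    h, w = shape
    rows = np.arange(h) - h // 2
    cols = np.arange(w) - w // 2
    g = np.exp(-(rows[:, None] ** 2 + cols[None, :] ** 2) / (2.0 * sigma_y ** 2))
    return np.fft.ifftshift(g)  # move the peak from the center to index (0, 0)

def train(x, sigma=0.5, lam=1e-4):
    """Kernel ridge regression in the Fourier domain (standard KCF, no sparsity term)."""
    k = gaussian_correlation(x, x, sigma)
    return np.fft.fft2(gaussian_label(x.shape)) / (np.fft.fft2(k) + lam)

def detect(alphaf, x, z, sigma=0.5):
    """Response map over all cyclic shifts of the search patch z."""
    k = gaussian_correlation(z, x, sigma)
    return np.real(np.fft.ifft2(alphaf * np.fft.fft2(k)))

def fuse_naive(response, density, alpha=0.5):
    """HYPOTHETICAL stand-in for the CNN fusion: reweight the normalized response
    by the normalized crowd density, so peaks on low-density background are damped."""
    r = (response - response.min()) / (response.max() - response.min() + 1e-12)
    d = (density - density.min()) / (density.max() - density.min() + 1e-12)
    return r * (1.0 - alpha + alpha * d)

def track_step(alphaf, x, z, density=None, sigma=0.5, alpha=0.5):
    """Return the (row, col) displacement of the target inside the search window."""
    # fftshift puts zero displacement at the window center, i.e. window coordinates,
    # so a density map cropped over the same window aligns pixel by pixel.
    centered = np.fft.fftshift(detect(alphaf, x, z, sigma))
    if density is not None:
        centered = fuse_naive(centered, density, alpha)
    p = np.unravel_index(np.argmax(centered), centered.shape)
    c = (centered.shape[0] // 2, centered.shape[1] // 2)
    return (int(p[0] - c[0]), int(p[1] - c[1]))
```

For example, training on a random patch `x` and searching in `np.roll(x, (3, -5), axis=(0, 1))` yields a displacement of `(3, -5)`. In the paper the density map comes from a crowd density estimator; here it is simply assumed to be an array cropped over the same search window as `z`. The multiplicative weighting is only one simple design choice: it leaves the tracker's ranking unchanged when the density is uniform and downweights responses where no people are expected.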

Selected Publications

- Fusing Crowd Density Maps and Visual Object Trackers for People Tracking in Crowd Scenes.
  In: IEEE Conf. Computer Vision and Pattern Recognition (CVPR), Salt Lake City, Jun 2018.

Demos/Results

- Visualization of the fusion tracking process (avi)

- Video result on the PETS2009 dataset (avi)

- Video result on the UCSD dataset (avi)